Whole Hand Haptics with True 3D Displays

Project Vision: To make virtual object interaction resemble real life

Our vision is a new interaction paradigm in which the user can touch and manipulate high-contrast, high-resolution, three-dimensional (3D) virtual objects suspended in space, using a glove that provides realistic whole-hand haptic feedback closely resembling interaction with objects in real life.

The system will consist of two main components. The first is a whole-hand haptic system comprising a glove mounted on a robot arm, which gives the user force feedback while manipulating an object, and a tactile array subsystem that renders textures to the fingertips. The second component is a three-dimensional display based on a holographic optical element (HOE) that permits glasses-free interaction with a virtual object: the user reaches into the object with the gloved hand. We also use the haptic glove with a more traditional stereo 3D display comprising a semi-transparent mirror and stereo glasses.

Haptic Glove

Hardware

|

|

|

|

| (a) | (b) | (c) | (d) |

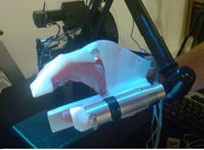

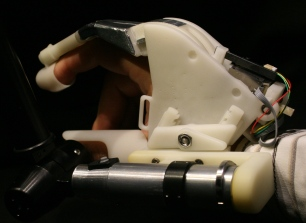

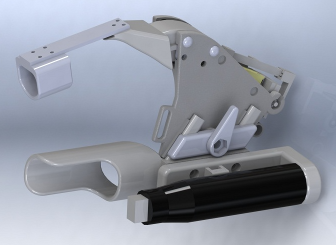

| Figure 1: Glove generations: (a) first generation; (b) second generation; (c) third generation; (d) CAD model of fourth generation. | |||

We designed, built, and evaluated four generations of one joint of the glove. The first generation (Figure 1a) was mainly intended to evaluate a piezoelectric motor (LEGS motor from PiezoMotor AB) for grip force control in the glove interface. The long-term goal of motorizing several joints in the same glove called for a modular design and rapid-prototyping plastic to permit short design iteration cycles. Special attention was paid to integrating all sensors and actuators in a small volume, in preparation for more advanced exoskeleton gloves. The evaluation of this first solution showed that piezo technology is well suited to haptic grip control, yielding the required stiffness over a very wide dynamic speed range. However, this design is sensitive to play in the joints between the actuator unit and the end effector, which is unavoidable with rapid-prototyped components.

In the second generation, the force sensor was moved closer to the end effector to reduce the effects of play and elastic deformation (Figure 1b). Still, the resilience of the plastic parts between the force cell and the end effector was noticeable, which required two more design and production iterations. In the third generation, the force sensor was replaced with a sensor using force-sensitive ink printed on a flexible polymer carrier (Figure 1c). The sensor was placed close to the fingertip to reduce the effects of elastic deformation. This type of force sensor has the advantage that it can be integrated in rather complex mechanical constructions without requiring much extra space. To reduce play in the joints and plastic deformation of the more critical mechanical parts, some parts were redesigned in steel and aluminum. The evaluation of the third generation clearly indicated that the desired haptic perception could be reached with this type of solution, with most artifacts attributable to the flexible force sensor. This was later verified in the fourth and final version, where a high-quality strain gauge force sensor replaced the force-sensitive ink on the polymer carrier. In this final design (Figure 1d), the resilience between the end effector and the force cell is negligible and most previously observed artifacts are avoided. The remaining mechanical play and plastic deformation could be eliminated by producing the plastic parts by injection molding of reinforced material, as would be done in a final product.

The two main remaining issues with the glove are audible noise and speed. The motor used in the glove prototypes is a standard component for high-precision movements, and its maximum speed does not allow very fast finger movements. For typical finger movements, the motor operates at frequencies in the kHz range, which produces audible noise. To address both issues, we designed a new ultrasonic motor intended to reach speeds above 100 mm/s and a stall force above 4 N.
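Why drive frequency ties speed to noise can be seen with a first-order relation for a stepping piezo drive: speed is roughly step length times drive frequency. The Python sketch below applies that relation under a hypothetical step length of 4 µm (the text does not state the real value for either motor) and checks the result against the nominal 20 Hz to 20 kHz band of human hearing. It is an illustration of the trade-off, not a model of the actual motors.

```python
"""Rough estimate of drive frequency for a stepping piezo motor.

Assumes speed = step length x drive frequency. The step length used below is
hypothetical; the actual value for the glove motors is not stated.
"""

AUDIBLE_MIN_HZ = 20.0       # approximate lower limit of human hearing
AUDIBLE_MAX_HZ = 20_000.0   # approximate upper limit of human hearing


def drive_frequency_hz(speed_mm_s: float, step_um: float) -> float:
    """Drive frequency needed to move at speed_mm_s with step_um per cycle."""
    step_mm = step_um / 1000.0
    return speed_mm_s / step_mm


def is_audible(freq_hz: float) -> bool:
    """True if the drive frequency lies in the nominal audible band."""
    return AUDIBLE_MIN_HZ <= freq_hz <= AUDIBLE_MAX_HZ


if __name__ == "__main__":
    HYPOTHETICAL_STEP_UM = 4.0
    for speed in (10.0, 100.0):  # mm/s: a slow finger motion and the target speed
        f = drive_frequency_hz(speed, HYPOTHETICAL_STEP_UM)
        print(f"{speed:6.1f} mm/s -> {f / 1000:5.1f} kHz, audible: {is_audible(f)}")
```

With these placeholder numbers, 10 mm/s needs about 2.5 kHz (audible), while 100 mm/s needs about 25 kHz, which is above the audible band; this is one way to see why an ultrasonic drive can address speed and noise together.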

The haptic glove is mounted on a robot arm, the Phantom Premium 1.5/6DOF, giving a total of seven degrees of freedom (DOF): six from the robot arm and a seventh from the grip between the thumb and index finger in the glove (Figures 2 and 3).

Figure 2: SensAble Phantom Premium 1.5/6DOF.

Figure 3: The glove attached to the Phantom.

In summary, the prototype admittance-type haptic glove with a compact, integrated piezoelectric motor can produce both accurate force-displacement responses for materials with non-linear elastic stiffness and a fast, stable response to an applied load.
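In an admittance-type device, force is the input and motion is the output: the controller reads the grip force and commands the motor toward the deflection the virtual material would have under that force. The minimal Python sketch below assumes a hypothetical hardening spring law F = k1·x + k3·x³ as the non-linear elastic material, inverts it by bisection, and moves the motor a fixed fraction of the way to the target each control cycle. The material law, constants, travel limit, and update rule are all illustrative assumptions, not the project's controller.

```python
"""Minimal admittance-control sketch for rendering a non-linear elastic material.

The material law and all constants are hypothetical illustrations.
"""

K1_N_PER_MM = 0.5     # hypothetical linear stiffness term
K3_N_PER_MM3 = 0.05   # hypothetical cubic (hardening) term
TRAVEL_MM = 20.0      # hypothetical travel limit of the finger joint


def spring_force(x_mm: float) -> float:
    """Force (N) the virtual material exerts at deflection x_mm."""
    return K1_N_PER_MM * x_mm + K3_N_PER_MM3 * x_mm ** 3


def target_deflection(force_n: float, tol_mm: float = 1e-6) -> float:
    """Invert the monotone force law by bisection: deflection for a given force."""
    if force_n <= 0.0:
        return 0.0
    if spring_force(TRAVEL_MM) <= force_n:
        return TRAVEL_MM  # saturate at the mechanical travel limit
    lo, hi = 0.0, TRAVEL_MM
    while hi - lo > tol_mm:
        mid = 0.5 * (lo + hi)
        if spring_force(mid) < force_n:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def admittance_step(measured_force_n: float, motor_pos_mm: float, gain: float = 0.2) -> float:
    """One control cycle: move the motor a fraction of the way to the target."""
    target = target_deflection(measured_force_n)
    return motor_pos_mm + gain * (target - motor_pos_mm)


if __name__ == "__main__":
    pos = 0.0
    for _ in range(50):
        pos = admittance_step(2.0, pos)  # user squeezes with a constant 2 N
    print(f"position after 50 cycles: {pos:.3f} mm")
```

Because the force law is monotone, bisection always finds a unique deflection; a real controller would also have to respect the motor's speed limit and the force sensor's noise.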

Software

In addition to basic software to control the glove, we developed and tested haptic functions such as gripping 3D objects with haptic feedback (Figure 4) and squeezing 3D objects of varying stiffness (Figure 5). We showed that the glove can realistically display load-deflection curves obtained from physical samples, and that linear compliance compensation in the control loop can further improve accuracy.
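One way to combine a measured load-deflection curve with linear compliance compensation is sketched below in Python: interpolate the curve to get the desired fingertip deflection for the measured force, then subtract the deflection the glove's own structure adds under that force (force times structural compliance), so that motor motion plus structural give equals the desired deflection. The sample curve, the compliance value, and the linear structural model are hypothetical placeholders; the project's actual control loop may differ.

```python
"""Sketch: display a measured load-deflection curve with linear compliance compensation.

The sample curve and the structural compliance are hypothetical placeholders.
"""
from bisect import bisect_right

# Hypothetical load-deflection samples from a physical specimen (forces increasing).
FORCES_N = [0.0, 0.5, 1.0, 2.0, 4.0]
DEFLECTIONS_MM = [0.0, 2.0, 3.5, 5.0, 6.0]

# Hypothetical compliance of the glove structure between motor and fingertip.
STRUCTURAL_COMPLIANCE_MM_PER_N = 0.3


def deflection_from_curve(force_n: float) -> float:
    """Piecewise-linear interpolation of the measured curve, clamped at both ends."""
    if force_n <= FORCES_N[0]:
        return DEFLECTIONS_MM[0]
    if force_n >= FORCES_N[-1]:
        return DEFLECTIONS_MM[-1]
    i = bisect_right(FORCES_N, force_n) - 1
    t = (force_n - FORCES_N[i]) / (FORCES_N[i + 1] - FORCES_N[i])
    return DEFLECTIONS_MM[i] + t * (DEFLECTIONS_MM[i + 1] - DEFLECTIONS_MM[i])


def motor_command_mm(force_n: float, compensate: bool = True) -> float:
    """Motor position intended to make the fingertip follow the measured curve.

    Under the linear model the fingertip moves by (motor position + compliance * force),
    so the structural deflection is subtracted when compensation is enabled.
    """
    desired = deflection_from_curve(force_n)
    if compensate:
        return desired - STRUCTURAL_COMPLIANCE_MM_PER_N * force_n
    return desired


def fingertip_mm(force_n: float, compensate: bool = True) -> float:
    """Resulting fingertip deflection under the linear structural-compliance model."""
    return motor_command_mm(force_n, compensate) + STRUCTURAL_COMPLIANCE_MM_PER_N * force_n
```

Without compensation, the fingertip in this model overshoots the curve by the structural deflection, 0.45 mm at 1.5 N with these placeholder numbers; with compensation it lands on the curve.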

Figure 4: Video: Interaction with a 3D virtual cube. The user can lift and manipulate the virtual cube. A physics simulation including weight, inertia, and gravity adds to the realism.

Figure 5: Video: The user squeezes a ball that can have different stiffness properties.
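The cube demonstration in Figure 4 relies on a physics simulation with weight, inertia, and gravity. The Python sketch below shows the kind of step such a simulation can take, reduced to one vertical axis: the hand can only transmit as much upward force as Coulomb friction at the two finger contacts allows (2·μ·F_grip), and the cube is integrated with semi-implicit Euler. The model, the parameters, and the `lift_force_n` input are hypothetical simplifications, not the physics engine used in the project.

```python
"""One-axis physics sketch for a virtual cube held between thumb and index finger.

Hypothetical simplification: vertical motion only, Coulomb friction at two contacts,
semi-implicit Euler integration, and a rigid floor at height 0.
"""
from dataclasses import dataclass

G_M_S2 = 9.81  # gravitational acceleration


@dataclass
class Cube:
    mass_kg: float
    height_m: float = 0.0
    velocity_m_s: float = 0.0


def max_friction_n(grip_force_n: float, mu: float) -> float:
    """Largest tangential force two Coulomb contacts can transmit."""
    return 2.0 * mu * grip_force_n


def step(cube: Cube, grip_force_n: float, mu: float, lift_force_n: float, dt_s: float) -> None:
    """Advance the cube by one time step (semi-implicit Euler)."""
    transmitted = min(lift_force_n, max_friction_n(grip_force_n, mu))
    accel = transmitted / cube.mass_kg - G_M_S2   # inertia: a = F / m, minus gravity
    cube.velocity_m_s += accel * dt_s             # update velocity first...
    cube.height_m += cube.velocity_m_s * dt_s     # ...then position with the new velocity
    if cube.height_m < 0.0:                       # rigid floor: no penetration
        cube.height_m = 0.0
        cube.velocity_m_s = 0.0


if __name__ == "__main__":
    firm, loose = Cube(mass_kg=0.2), Cube(mass_kg=0.2)
    for _ in range(100):  # 0.1 s at 1 kHz
        step(firm, grip_force_n=5.0, mu=0.5, lift_force_n=3.0, dt_s=0.001)
        step(loose, grip_force_n=1.0, mu=0.5, lift_force_n=3.0, dt_s=0.001)
    print(f"firm grip: {firm.height_m * 1000:.1f} mm, loose grip: {loose.height_m * 1000:.1f} mm")
```

With a firm grip the friction limit exceeds the cube's weight and it rises; with a loose grip the transmittable force is smaller than the weight and the cube stays on the floor, which is the kind of cue that makes lifting feel plausible.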

3D Holographic Display

|

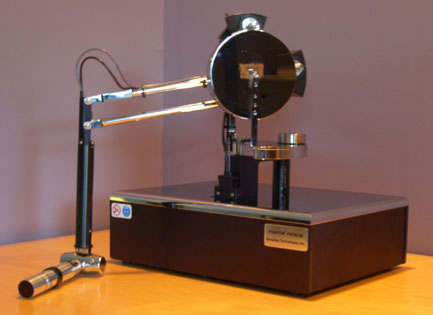

| Figure 6: Holographic display prototype. |

The holographic optical element (HOE) acts as a projection screen for several digital projectors (Figure 6). Each projector's image is visible only within a narrow viewing angle and represents one view of the 3D object. With multiple, properly spaced projectors, each eye receives a separate view, and the user perceives a 3D model suspended in space above the holographic plate, with no obstacle between viewer and image, so the user can reach into the 3D model. Increasing the number of projectors extends the field of view and allows the object to be viewed from more angles. The 3D model can be manipulated in real time using the graphics capabilities of modern computer systems. More information about the display technology can be found under "Examples of visualisation activities at KTH schools" at the following link.
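The viewing geometry described above can be sketched as follows: each projector's view occupies one narrow angular zone, and an eye sees whichever zone its viewing direction falls into. In this Python sketch, all geometry is hypothetical and not taken from the prototype: n projectors with equal angular pitch, zones centred on the display normal, a viewing distance of 0.6 m, and a typical interpupillary distance of 65 mm.

```python
"""Sketch: which projector view each eye sees on a multi-projector HOE display.

Hypothetical geometry: n_projectors zones of equal angular width pitch_deg,
centred on the display normal; eyes separated horizontally by ipd_m.
"""
import math
from typing import Optional, Tuple


def eye_angles_deg(head_x_m: float, distance_m: float, ipd_m: float = 0.065) -> Tuple[float, float]:
    """Horizontal viewing angles of the left and right eye relative to the plate centre."""
    left = math.degrees(math.atan2(head_x_m - ipd_m / 2.0, distance_m))
    right = math.degrees(math.atan2(head_x_m + ipd_m / 2.0, distance_m))
    return left, right


def visible_view(angle_deg: float, n_projectors: int, pitch_deg: float) -> Optional[int]:
    """Index of the projector view seen at angle_deg, or None outside the field of view.

    Zone i covers angles [(i - n/2) * pitch, (i - n/2 + 1) * pitch).
    """
    idx = math.floor(angle_deg / pitch_deg + n_projectors / 2.0)
    return idx if 0 <= idx < n_projectors else None


if __name__ == "__main__":
    left, right = eye_angles_deg(head_x_m=0.0, distance_m=0.6)
    print("left eye view:", visible_view(left, 8, 3.0))
    print("right eye view:", visible_view(right, 8, 3.0))
```

The total field of view in this model is n_projectors × pitch_deg, which matches the observation that adding projectors widens the range of viewing angles.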

We evaluated the display prototype and found its visual quality comparable to that of more common display types. The significant display latency in the prototype can mainly be attributed to the projectors and should be addressed in future display generations. We also investigated the use of head tracking to smooth view transitions and give the user a better look-around capability.
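One plausible way head tracking can smooth view transitions is sketched below; this is an assumption, since the text does not describe the method used. Without tracking, each projector renders from a fixed viewpoint at the centre of its viewing zone, so the perspective jumps by one zone pitch when an eye crosses a zone boundary. With tracking, the projector whose zone contains the eye renders from the tracked eye direction, so the perspective changes continuously. The zone layout (equal angular pitch, centred on the display normal) is hypothetical.

```python
"""Sketch: camera direction for the projector whose zone contains an eye.

Hypothetical geometry: n_projectors zones of equal angular width pitch_deg,
centred on the display normal. The smoothing strategy is an assumption.
"""
import math
from typing import Optional


def zone_index(eye_angle_deg: float, n_projectors: int, pitch_deg: float) -> Optional[int]:
    """Viewing zone containing the eye, or None outside the field of view."""
    idx = math.floor(eye_angle_deg / pitch_deg + n_projectors / 2.0)
    return idx if 0 <= idx < n_projectors else None


def camera_angle_deg(eye_angle_deg: float, n_projectors: int, pitch_deg: float,
                     head_tracked: bool) -> Optional[float]:
    """Horizontal angle from which the active projector renders the scene."""
    idx = zone_index(eye_angle_deg, n_projectors, pitch_deg)
    if idx is None:
        return None
    if head_tracked:
        return eye_angle_deg  # follow the tracked eye continuously
    return (idx - (n_projectors - 1) / 2.0) * pitch_deg  # fixed zone-centre viewpoint


if __name__ == "__main__":
    for eye in (2.9, 3.1):  # eye crosses the zone boundary at 3 degrees
        print(eye, camera_angle_deg(eye, 8, 3.0, False), camera_angle_deg(eye, 8, 3.0, True))
```

In this model, moving the eye from 2.9° to 3.1° makes the untracked camera jump from 1.5° to 4.5°, while the tracked camera moves by only 0.2°. The cost is that rendering must follow the head with low latency, which ties into the latency issue noted above.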

People

- Ingrid Carlbom, Centre for Image Analysis (Project Leader)

- Ewert Bengtsson, Centre for Image Analysis (Assistant Project Leader)

- Ingela Nyström, Centre for Image Analysis

- Filip Malmberg, Centre for Image Analysis

- Stefan Seipel, Centre for Image Analysis

- Pontus Olsson, Centre for Image Analysis

- Fredrik Nysjö, Centre for Image Analysis

- Johan Nysjö, Centre for Image Analysis

- Stefan Johansson, Dept. of Engineering Sciences (also PiezoMotor AB)

- Kristofer Gamstedt, Dept. of Engineering Sciences

- Håkan Lanshammar, Dept. of Information Technology

- Kjartan Halvorsen, Dept. of Information Technology

- Jan-Michael Hirsch, Dept. of Surgical Sciences, Oral and Maxillofacial Surgery

- Lars Mattsson, Industrial Metrology and Optics Group, Swedish Royal Institute of Technology (KTH)

- Jonny Gustafsson, Industrial Metrology and Optics Group, KTH (also HoloVision AB)

- Roland S Johansson, Dept. of Physiology, Umeå University (Consultant)

Alumni

- Martin Ericsson, Centre for Image Analysis

- Robin Strand, Centre for Image Analysis

- Jim Björk, MSc student, Uppsala University

- Gunnar Jansson (deceased in 2010, formerly at Dept. of Psychology, Uppsala University)

Steering Committee

The project steering committee, in addition to the project leader and assistant project leader, comprised Håkan Lanshammar, Head of the Department of Information Technology, Uppsala University; Lars Mattsson, Head of Industrial Metrology and Optics, KTH; Stefan Johansson, PiezoMotor AB and Department of Engineering Sciences, Uppsala University; and Jan-Michael Hirsch, Department of Surgical Sciences, Oral and Maxillofacial Surgery, Uppsala University.

Industrial Collaborators

PiezoMotor AB (Stefan Johansson)

SenseGraphics AB (Johan Mattsson Beskow, Daniel Evestedt, Tommy Forsell)

Imagination Studios AB (John Klepper, Christian Sjöström)

Period

The project started in 2009 and ended in 2012.

Related Projects

Feeling is believing

Visualization and haptics for interactive medical image analysis

Orbit segmentation for cranio-maxillofacial surgery planning

Funding Agencies

The Visualization Program by Knowledge Foundation, VINNOVA, SSF, ISA, and Vårdalsstiftelsen.