Stina Svensson

Funding: SLU S-faculty, STINT

Period: 0210-

Partners: Pieter Jonker, Pattern Recognition Section, Faculty of Applied Physics, Delft University of Technology, Delft, The Netherlands

Abstract: In medical applications, devices producing three-dimensional (3D) images have been in use since the sixties for imaging anatomical or functional aspects of the human body. It is also possible to use images with more than three dimensions. Four-dimensional (4D) images can be used to represent a 3D object moving through space-time, e.g., a beating heart or a moving robot. During 2002, we started to work with this kind of image and, more specifically, to analyse the shape of the objects in such images. As 4D images contain huge amounts of data, we first need to find a suitable representation with a reduced amount of data that still contains enough information to actually perform shape analysis. This can be achieved using skeletonization algorithms (see Project 39 - skeletonization of volume images). These create a compact representation of a complex object that can be used, for instance, in the visualization and quantitative analysis of spatial processes in living cells and tissues.

The first, and ongoing, step is to develop methods for identifying hyxels (hyper volume picture elements) that can be removed without altering the topology of the original object. This is a prerequisite for a topology-preserving skeletonization algorithm.
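The idea of a removable element is easiest to see in 2D. The sketch below is only a 2D analogue, not the 4D hyxel test developed in the project: it assumes an 8-connected object and a 4-connected background, and treats an object pixel as removable when its 3×3 neighbourhood contains exactly one object component and exactly one background component that touches the pixel edge-to-edge. The function names `components` and `is_simple` are hypothetical.

```python
from itertools import product

# Offsets of the 8 neighbours of a pixel (row, col), centre excluded.
RING = [d for d in product((-1, 0, 1), repeat=2) if d != (0, 0)]
# Offsets of the 4 edge-sharing neighbours.
FOUR = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def components(cells, adjacent):
    """Group cells into connected components under the given adjacency test."""
    remaining, groups = set(cells), []
    while remaining:
        stack = [remaining.pop()]
        group = set(stack)
        while stack:
            cur = stack.pop()
            linked = {c for c in remaining if adjacent(cur, c)}
            remaining -= linked
            group |= linked
            stack.extend(linked)
        groups.append(group)
    return groups


def is_simple(image, r, c):
    """True if object pixel (r, c) can be removed without changing 2D topology.

    Assumptions: object pixels have value 1 and are 8-connected, background
    pixels have value 0 and are 4-connected, and everything outside the
    image counts as background.
    """
    def value(dr, dc):
        rr, cc = r + dr, c + dc
        inside = 0 <= rr < len(image) and 0 <= cc < len(image[0])
        return image[rr][cc] if inside else 0

    obj = [p for p in RING if value(*p) == 1]
    bkg = [p for p in RING if value(*p) == 0]

    def eight(a, b):
        return max(abs(a[0] - b[0]), abs(a[1] - b[1])) == 1

    def four(a, b):
        return abs(a[0] - b[0]) + abs(a[1] - b[1]) == 1

    obj_parts = components(obj, eight)
    # Only background components that share an edge with the centre matter.
    bkg_parts = [g for g in components(bkg, four) if any(p in g for p in FOUR)]
    return len(obj_parts) == 1 and len(bkg_parts) == 1


line = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
end_is_simple = is_simple(line, 1, 1)     # end pixel of the line
middle_is_simple = is_simple(line, 1, 2)  # removing it would split the line
```

Removing the middle pixel would break the line into two components, so it is not removable, whereas an end pixel is. Whether an end pixel should actually be kept is a separate, shape-related decision that a skeletonization algorithm makes on top of this topological test.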

The work within this project was facilitated by a two-month visit by Stina Svensson to the Pattern Recognition Group in Delft, The Netherlands (made possible by a grant from STINT - The Swedish Foundation for International Cooperation in Research and Higher Education).

Ingela Nyström, Gunilla Borgefors

Funding: UU TN-faculty, SLU S-faculty

Period: 0209-

Partners: Gabriella Sanniti di Baja, Istituto di Cibernetica, CNR, Pozzuoli, Italy

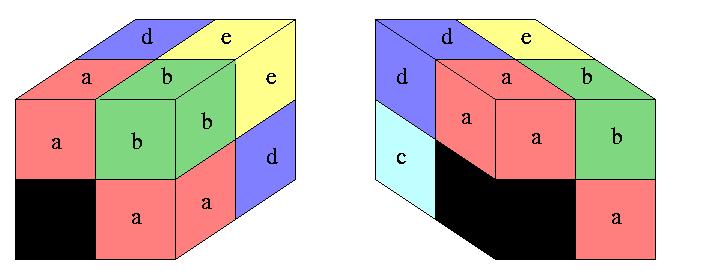

Abstract: We compute discrete convex hulls in 2D grey-level images, where we interpret grey-level values as heights in 3D landscapes. For these 3D objects, we compute approximations of their convex hull using a 3D binary method. Unlike other grey-level convex hull algorithms, which produce results that are convex only in the geometric sense, our convex hull is also convex in the grey-level sense, which implies that uneven illumination can be eliminated. See Figure 13.

Figure 13: (a) Bruce; (b) 3D Bruce; (c) 3D convex hull; (d) Grey-level convex hull; (e) Grey-level concavities; (f) 3D concavities.

Stina Svensson, Ida-Maria Sintorn

Funding: SLU S-faculty; CNR Italy

Period: 9801-

Partners: Gabriella Sanniti di Baja, Istituto di Cibernetica, CNR, Pozzuoli, Italy

Abstract: Object, or shape, representation is an essential part of image analysis, especially in object recognition. One way of representing an object is to decompose it into significant parts. Object recognition thereby becomes a hierarchical process where each part is analysed and recognised individually. Object parts can be obtained in different ways. We use the distance transform of the object and identify therein suitable ``seeds'' corresponding to the regions into which the object will be decomposed. Starting from the seeds, the object components are obtained by a region growing process. This yields a decomposition into nearly convex parts and elongated parts (i.e., necks and protrusions). During 2002, this resulted in an article published in Image and Vision Computing.

Recently, we have started to use the method in various applications and, in doing so, investigated which modifications and simplifications can be made to optimise it for a specific application.

When analysing the shape of an object, it is of interest to study not only the object itself but also its complement. This can give us information on, for example, the structure of tunnels possibly existing in the object. We start the analysis by identifying the convex deficiency of the object, i.e., the difference between the convex hull of the object and the object. The convex deficiency can then be decomposed into regions corresponding to cavities or tunnels in the object. The structure of the tunnels can be further analysed with respect to branching, thickness, and length. The research on the analysis of the convex deficiency was initiated during 2002 and facilitated by a two-month visit by Stina Svensson to Napoli during the summer of 2002 (made possible by an Italian Institute of Culture ``C. M. Lerici'' Foundation grant).
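As a small illustration of the definition of the convex deficiency, the sketch below computes it for a 2D pixel set. It is not the project's method: it assumes pixels given as integer (x, y) pairs, uses the continuous convex hull of the pixel centres (Andrew's monotone chain) rather than a discrete hull approximation, and assumes the object is not degenerate (its pixels are not all on one line). All function names are hypothetical.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive if b lies left of o -> a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]  # last vertex is the first of the other half

    return half(pts) + half(reversed(pts))


def convex_deficiency(obj):
    """Pixels inside (or on) the convex hull of obj that are not in obj."""
    obj = set(obj)
    hull = convex_hull(obj)
    n = len(hull)

    def inside(p):
        # For a counter-clockwise hull, interior points are left of every edge.
        return all(cross(hull[i], hull[(i + 1) % n], p) >= 0 for i in range(n))

    xs = [x for x, _ in obj]
    ys = [y for _, y in obj]
    return {
        (x, y)
        for x in range(min(xs), max(xs) + 1)
        for y in range(min(ys), max(ys) + 1)
        if (x, y) not in obj and inside((x, y))
    }


# A U-shaped object: the deficiency is the cavity between the two arms.
u_shape = {(0, 0), (0, 1), (0, 2), (1, 0), (2, 0), (2, 1), (2, 2)}
cavity = convex_deficiency(u_shape)
```

For the U-shaped example the result is the two pixels between the arms, which a later step could label as a cavity rather than a tunnel because it is open to the outside.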

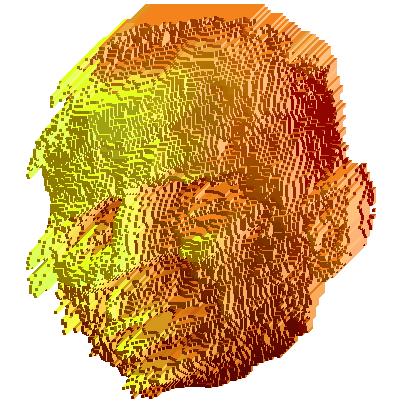

An example of a synthetic object with shape suitable to be decomposed by our method is shown in Figure 14.

Figure 14: Decomposed synthetic object, with parts shown using different grey-levels, resembling an object from an application where decomposition is a suitable shape representation scheme.

Gunilla Borgefors, Ida-Maria Sintorn, Stina Svensson

Funding: SLU S-faculty

Period: 9309-

Abstract: In a distance transform (DT), each picture element in an object is labelled with the distance to the closest element in the background. Thus the shape of the object is ``structured'' in a useful way. Only local operations are used, even though the results are global distances. DTs are very useful tools in many types of image analysis, from simple noise removal to advanced shape recognition. We have investigated DTs since the early 1980s.
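How local operations can produce global distances is perhaps clearest in a two-pass weighted DT. The following is a minimal 2D sketch under stated assumptions: the image is a list of rows with 1 for object and 0 for background, and the local distances are the commonly used integer weights 3 (edge neighbour) and 4 (diagonal neighbour), which are chosen here for illustration and are not taken from the text. The function name `chamfer_dt` is hypothetical.

```python
INF = float("inf")


def chamfer_dt(image, a=3, b=4):
    """Two-pass weighted distance transform of a 2D binary image.

    a is the local distance to an edge neighbour, b to a diagonal neighbour.
    Background pixels get 0; object pixels get the weighted distance to the
    closest background pixel (INF if the image has no background at all).
    """
    rows, cols = len(image), len(image[0])
    dist = [[0 if image[r][c] == 0 else INF for c in range(cols)]
            for r in range(rows)]

    # Each mask holds the neighbours already visited in its scan direction.
    forward = [(-1, -1, b), (-1, 0, a), (-1, 1, b), (0, -1, a)]
    backward = [(1, 1, b), (1, 0, a), (1, -1, b), (0, 1, a)]

    def sweep(row_order, col_order, mask):
        for r in row_order:
            for c in col_order:
                for dr, dc, w in mask:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        dist[r][c] = min(dist[r][c], dist[rr][cc] + w)

    sweep(range(rows), range(cols), forward)                           # top-left to bottom-right
    sweep(range(rows - 1, -1, -1), range(cols - 1, -1, -1), backward)  # and back
    return dist


square = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
dt = chamfer_dt(square)
```

Each pixel is only ever compared with its immediate neighbours, yet after the two sweeps the centre of the square carries the distance 6, i.e., two edge steps to the background.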

Weighted DTs in 3D have previously been investigated, using information from a 3×3×3 neighbourhood of each voxel. Now research is concentrated on 5×5×5 neighbourhoods, where the complexity of the digital geometry poses a real challenge. In a 5×5×5 neighbourhood, there exist six local distances, denoted a to f in order of increasing Euclidean distance, compared to three local distances (a, b, c) in a 3×3×3 neighbourhood. We have performed a study of optimal local distances using from one to six of the local distances, all from the 5×5×5 neighbourhood. This resulted in an article published in Computer Vision and Image Understanding in 2002.
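The counts of three and six local distances can be reproduced by enumerating the step directions in each neighbourhood. The sketch below assumes that steps which are integer multiples of a shorter step, such as (2, 0, 0), do not get a local distance of their own, and that steps differing only by sign or axis permutation share one local distance. The function name `local_steps` is hypothetical.

```python
from functools import reduce
from itertools import product
from math import gcd


def local_steps(half_width, dims):
    """Distinct step types in a (2*half_width + 1)^dims neighbourhood.

    Each type is a tuple of absolute offsets sorted in decreasing order.
    The result is ordered by increasing Euclidean length.
    """
    steps = set()
    for offset in product(range(half_width + 1), repeat=dims):
        # gcd == 1 excludes the zero step and multiples of shorter steps.
        if reduce(gcd, offset) == 1:
            steps.add(tuple(sorted(offset, reverse=True)))
    return sorted(steps, key=lambda s: sum(x * x for x in s))


steps_3 = local_steps(1, 3)  # 3x3x3 neighbourhood
steps_5 = local_steps(2, 3)  # 5x5x5 neighbourhood
```

Under these assumptions, `steps_5` lists the six step types in order of increasing Euclidean length, with squared lengths 1, 2, 3, 5, 6, and 9, which matches the ordering a to f described above.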

More and more applications are moving towards 4D imagery (e.g., a sequence of volume images or a grey-level 3D image seen as a 4D ``landscape''). Optimal local distances for weighted DTs in 3×3×3×3 neighbourhoods have been computed. The results will be published in ``Discrete Applied Mathematics'' in 2003.

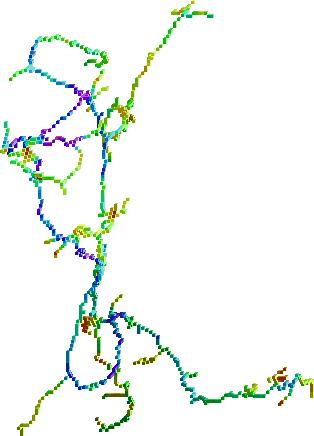

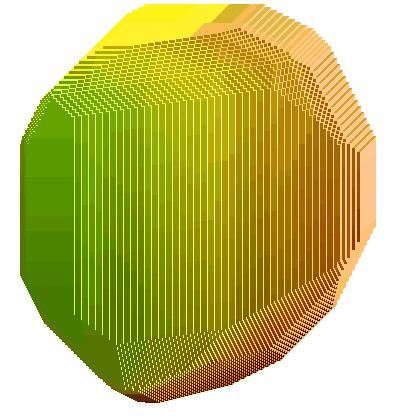

In medical and industrial volume images, the picture elements are often rectangular boxes rather than cubes, because the images are created as a stack of ``slices.'' It would be preferable to work directly in such grids, rather than interpolating the image to a cubic grid. However, DT based methods (among others) are not directly applicable to non-cubic grids. Therefore, we have investigated DTs in such elongated grids with voxels of size 1×1×L, i.e., two sides are equal (to one) and the third is larger, L>1. The neighbourhood used is shown in Figure 15. The optimisation in 3D gives rise to four types of regular DTs, of which one, the simplest, was further investigated. The results show that the error grows very rapidly with increasing L. The use of these DTs is therefore only recommended when either L is small or only relative distances are needed and rotation invariance is not important. This work was presented at the 10th International Conference on Discrete Geometry for Computer Imagery 2002, and will shortly appear in a ``Pattern Recognition Letters'' special issue from the conference.

Figure 15: Local distances for 3×3×3 WDTs in rectangular grids. (Two views of the same neighbourhood.)
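To see why an elongated grid needs more local distances than a cubic one, it can help to list the Euclidean lengths of the steps in a 3×3×3 neighbourhood when voxels have size 1×1×L. The sketch below computes only these geometric step lengths, not the optimised weights from the study; the function name `box_grid_steps` and the (in-plane moves, slice moves) key are hypothetical.

```python
from itertools import product
from math import sqrt


def box_grid_steps(L):
    """Euclidean lengths of the step types in a 3x3x3 neighbourhood
    of a grid with voxel size 1 x 1 x L.

    Keys are (number of unit moves within the slice, moves across slices).
    """
    steps = {}
    for dx, dy, dz in product((0, 1), repeat=3):
        if (dx, dy, dz) == (0, 0, 0):
            continue
        in_plane = dx + dy  # equals dx*dx + dy*dy because dx, dy are 0 or 1
        steps[(in_plane, dz)] = sqrt(in_plane + (L * dz) ** 2)
    return steps


cubic = box_grid_steps(1)      # ordinary cubic grid
elongated = box_grid_steps(2)  # slices twice as thick as the pixel size
```

For L = 1 the five step types collapse to the three lengths 1, √2, and √3 of the cubic grid, while for L > 1 all five lengths differ.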

Gunilla Borgefors, Stina Svensson

Funding: SLU S-faculty

Period: 0110-

Abstract: In most applications, the input data is a grey-level image. The segmentation process, i.e., the process to separate the objects from the background, is often difficult. Thus, it is of interest to work directly with the grey-level images. We have recently started to extend some bi-level methods we have developed to deal with objects in grey-level images, called grey-level objects.

One simple type of grey-level object is where the segmentation is rather easy, except for the elements placed in the border of the object. This is the case for the 3D images of fibres in paper (see Project 25) we are using, where the border of the fibre wall is fuzzy. Strictly assigning the voxels in the border either to the object or to the background gives an analysis of the object that is sensitive to noise. It is possible to decide in what range of grey-levels the border is placed. The result of a bi-level skeletonization algorithm would be completely different if all voxels in the range were assigned to the object compared to if all were assigned to the background. Instead, the values can be used to describe to what degree the voxels belong to the object, and algorithms can be developed taking this into account. In this way, we obtain a more stable analysis of the object. During 2002, two papers were published on this topic. The fuzzy border distance transform was introduced for 2D images at the International Conference on Pattern Recognition (ICPR) 2002 and published in the conference proceedings. A skeletonization algorithm developed for the ``regular'' distance transform was modified to be guided by fuzzy border distance transforms. The algorithm was given as an example in the paper. For 3D images, the fuzzy border distance transform was introduced and, again, exemplified by a skeletonization algorithm at the Swedish Symposium on Image Analysis 2002.

Ingela Nyström, Stina Svensson, Gunilla Borgefors

Funding: UU TN-faculty, SLU S-faculty

Period: 9501-

Partners: Gabriella Sanniti di Baja, Istituto di Cibernetica, CNR, Pozzuoli, Italy; Pieter Jonker, Pattern Recognition Section, Faculty of Applied Physics, Delft University of Technology, Delft, The Netherlands

Abstract: Skeletonization (or thinning) denotes the process where objects are reduced to structures of lower dimension. Skeletonization reduces objects in 2D images to a set of planar curves and objects in volume (3D) images to a set of 3D surfaces. In volume images, skeletonization might furthermore compress the skeleton to a set of 3D curves. Skeletonizing volume images is a promising approach for quantification and manipulation of volumetric shape, which is becoming more and more essential, e.g., in medical image analysis.

We follow the approach of first reducing an object to a surface skeleton and then further reducing the surface skeleton to a curve skeleton. One method to reduce an object to a surface skeleton is based on iterative thinning of the distance transform of the object in a topology and shape preserving way. The skeletons produced fulfil the skeletal properties: they are topologically correct, centred within the object, thin, and fully reversible. The last property is rare for 3D skeletons. During 2002, an attempt at creating a general framework for different distance transforms was published in a proceedings book from an international workshop held in December 2000.

During the Autumn, we started to study the relation between using a mathematical morphology framework and using a distance transform based framework for reducing an object to a surface skeleton. This work is done in cooperation with Pieter Jonker and was initiated while Stina Svensson visited the Pattern Recognition Group in Delft, The Netherlands, for two months.

To reduce the surface skeleton to a curve skeleton, we have developed a method based on the detection of curves and of junctions between surfaces in the surface skeleton. The surface skeleton is iteratively thinned while keeping voxels placed in curves and in (some of the) junctions and voxels necessary for topology preservation. The algorithm can be applied even if the surface skeleton is two voxels thick (in parts), which is often the case. This is generally not true for other algorithms. The curve skeletonization algorithm was published during 2002 as an article in Pattern Recognition Letters.

Ingela Nyström, Stina Svensson

Funding: SLU S-faculty, UU TN-faculty

Period: 0109-

Partners: Gabriella Sanniti di Baja and Carlo Arcelli, Istituto di Cibernetica, CNR, Pozzuoli, Italy

Abstract: In most applications, the input data is a grey-level image. The segmentation process, i.e., the process to separate the objects from the background, is often difficult. Thus, it is of interest to work directly with the grey-level images. We have recently started to extend our bi-level skeletonization algorithms to deal with objects in grey-level images, called grey-level objects.

For grey-level objects, we can think of a number of different situations. One is where it is reasonable to assume that the most important regions of the grey-level object consist of the voxels with the highest grey-levels. This can be the case for magnetic resonance angiography, which images the blood flow in the vessels. The distribution of grey-levels in the vessel may not be symmetric, i.e., the ``centre-of-mass'' is not centrally located. By using a representation scheme that is adjusted to the regions where voxels with the highest grey-levels are placed, a more reliable analysis can be obtained than if only the distance from the border of the object is considered. It also implies that the segmentation process is less crucial. What is needed is a region of interest containing the blood vessel, together with a decision on the highest grey-level that can occur in the background. A first study shows good results for skeletonization of 3D grey-level objects. We presented this approach at the International Conference on Pattern Recognition (ICPR) 2002, and it was published in the conference proceedings. An example of a blood vessel and the resulting grey-medial surface representation can be found in Figure 16. The original image is a fuzzy segmented magnetic resonance angiography image. The grey-medial surface representation is indeed a surface representation, but in the case of the blood vessel, owing to the elongated shape of the vessel, the representation is close to a curve everywhere.

Figure 16: Blood vessel and its grey-medial surface representation. [By courtesy of Dr. Punam K. Saha, MIPG, Dept. of Radiology, University of Pennsylvania, Philadelphia.]

Stina Svensson

Funding: SLU S-faculty

Period: 0201-

Partners: Gabriella Sanniti di Baja and Carlo Arcelli, Istituto di Cibernetica, CNR, Pozzuoli, Italy; David Coeurjolly, Laboratoire LIRIS, Université Lumière Lyon 2, Lyon, France

Abstract: Elongated objects in 3D images can be represented by curves. See Project 25, where a curve representation is used for the fibres. For this reason it is of interest to develop tools to analyse curves in 3D images.

The distance from the end-points of the curve can be propagated over the curve. This can be used to distinguish important branches from non-important ones and thereby find the most significant branches. This topic has been extensively investigated for 2D line patterns, but so far not much has been done for 3D images.
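One possible reading of this propagation is a breadth-first traversal that starts from all end-points at once. The sketch below makes several assumptions that are not in the text: the curve is a set of integer voxel coordinates, voxels are 26-connected, an end-point is a voxel with exactly one neighbour on the curve, and distance is counted in steps rather than weighted by step length. The function names are hypothetical.

```python
from collections import deque
from itertools import product

# The 26 neighbour offsets of a voxel.
OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def neighbours(voxel, curve):
    """Curve voxels that are 26-adjacent to the given voxel."""
    x, y, z = voxel
    candidates = ((x + dx, y + dy, z + dz) for dx, dy, dz in OFFSETS)
    return [v for v in candidates if v in curve]


def endpoint_distances(curve):
    """Step distance from every curve voxel to its closest end-point."""
    curve = set(curve)
    ends = [v for v in curve if len(neighbours(v, curve)) == 1]
    dist = {v: 0 for v in ends}
    queue = deque(ends)
    while queue:  # multi-source breadth-first propagation
        cur = queue.popleft()
        for nb in neighbours(cur, curve):
            if nb not in dist:
                dist[nb] = dist[cur] + 1
                queue.append(nb)
    return dist


straight = [(i, 0, 0) for i in range(5)]
distances = endpoint_distances(straight)
```

On a branched curve, the label reached at a junction along a branch indicates how long that branch is, which is the kind of information a pruning step could use to separate significant branches from short ones.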

Beyond the distances between various parts of the curve, the curvature along the curve is of interest. For each voxel in a curve, we can compute the curvature in that specific voxel. David Coeurjolly has developed a purely discrete curvature estimator that can be computed in linear time. We have studied the performance of this estimator and used it in the analysis of the fibres in 3D images of paper (Project 25). A small, preliminary study on synthetic data was presented at the Swedish Symposium on Image Analysis 2002.

Anders Hast, Ewert Bengtsson

Funding: Dept. of Mathematics, Natural Sciences, and Computing, University College of Gävle; The KK-foundation

Period: 9911-

Partner: Tony Barrera, Cycore AB, Uppsala

Abstract: Computer graphics is increasingly being used to create realistic images of 3D objects. Typical applications are in entertainment (animated films, games), commerce (showing 3D images of products on the web which can be manipulated and rotated), industrial design, and medicine. For the images to look realistic, high quality shading and rendering of surface texture and topology are necessary. Many fundamental algorithms in this field were developed as early as the early seventies. The algorithms that produce the best results are computationally quite demanding (e.g., Phong shading), while others produce less satisfactory results (e.g., Gouraud shading). In order to make full 3D animation on standard computers feasible, high efficiency is necessary. In this project, we are re-examining those algorithms and finding new mathematical ways of simplifying the expressions and increasing the implementation speeds without sacrificing image quality. See Figure 17. The project is carried out in close collaboration with Tony Barrera at Cycore AB. In May 2002, Hast presented his licentiate thesis based on this work. See Section 4.2. Additionally, one journal paper and three conference papers were submitted, as well as five chapters for a book on graphics algorithms.

Figure 17: The shadowed area is not set to ambient light, as is usually the case, which would make it flat and dull. Instead, a new modified Phong-Blinn model is used where the underlying geometry is clearly visible.
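For reference, the standard Blinn-Phong illumination model that such work starts from can be sketched as follows. This is the textbook model, not the modified model of the project; the coefficient values and the function names are illustrative assumptions, and the light and viewer directions are assumed not to be exactly opposite (otherwise the halfway vector is undefined).

```python
from math import sqrt


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_viewer,
                ambient=0.1, diffuse=0.6, specular=0.3, shininess=32):
    """Intensity at a surface point under the standard Blinn-Phong model."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # halfway vector
    lambert = max(dot(n, l), 0.0)
    # No highlight on surfaces facing away from the light.
    highlight = max(dot(n, h), 0.0) ** shininess if lambert > 0 else 0.0
    return ambient + diffuse * lambert + specular * highlight


lit = blinn_phong((0, 0, 1), (0, 0, 1), (0, 0, 1))       # light head-on
unlit = blinn_phong((0, 0, 1), (0, 0, -1), (1, 0, 1))    # light from behind
```

In the standard model, a point facing away from the light receives only the constant ambient term, which is the flat appearance the caption above refers to. The per-pixel normalisations and the power function are also where most of the computational cost lies.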

Fredrik Bergholm

Funding: SSF; UU TN-faculty

Period: 0108-

Partners: Jens Arnspang, Knud Henriksen, Dept. of Computer Science (DIKU), University of Copenhagen, Denmark

Abstract: The plenoscope is an optical invention (SE00/00004) for which we, the inventors, are currently pursuing patent applications in Europe, America, and Japan; a Swedish patent has been granted. The interesting aspect of plenoscopes, which may crudely be described as lens systems equipped with obstacles in the vicinity of the focal plane of the ocular, is that an ordinary camera, video camera, microscope, etc. may be converted into a kind of 3D camera by placing a plenoscope in front of the conventional lens system. Thereby, a photographic image with 3D-dependent distortions is obtained. Using image analysis, these distortions may, in principle, be translated into a depth map plus an ordinary photographic image or, alternatively, into a (real-time) 3D visualisation. In 2001-02, a theoretical framework for describing the image formation in plenoscopes was established. In a short proceedings article (SSAB02), some useful formulas are briefly described. The obstacles in plenoscopes were modified in Autumn 2002 to obtain more precision. A typical obstacle plate contains thousands of elements. Obstacles have been tested in microscopes.

Bo Nordin, Ewert Bengtsson

Funding: UU TN-faculty

Period: 8807-

Partners: Diascan AB, Uppsala; Wallac Oy, Åbo, Finland

Abstract: Recognising the need in image analysis research for a good platform for interactive work with digital images, we started a project several years ago with the aim of developing such a platform. The project originally involved some 10 man-years of work, which would have been impossible to finance with regular research money. Through a cooperation with a group of companies, however, we co-ordinated our interest in obtaining a good software platform for research with their interest in developing a new software product. Unfortunately, the companies never actively turned the resulting system, which was given the name IMP, into a product. At CBA, however, the IMP system has been used as a software basis for most of the teaching and research in image analysis for the last decade.

Some years ago, we started a major revision of the system as a ``background task'' for Nordin. The main goal was to re-program the core system in C++ to make it easier to maintain and extend. In 2002, we decided to write a completely new program platform, pixy, based on the new C++ core and with all image analysis functions written in C++ in order to take advantage of the C++-specific language constructs (classes, inheritance, polymorphism, templates, etc.) to enhance the programmer's API and make the code more reusable.

In pixy, it is easy to add plug-in modules with new functionality and new classes. Several such modules have been implemented: MUSE (multivariate segmentation) and filter editors for editing filters in the spatial domain as well as in the Fourier domain. A first release of pixy is planned for the summer of 2003.