Ingrid Carlbom, Ewert Bengtsson, Filip Malmberg, Ingela Nyström, Stefan Seipel, Pontus Olsson, Fredrik Nysjö

Partners: Stefan Johansson (Division of Microsystems Technology, Uppsala University and Tekno-vest AB); Jonny Gustafsson and Lars Mattson, Industrial Metrology and Optics Group, KTH; Jan-Michaél Hirsch, Dept. of Surgical Sciences, Oral & Maxillofacial Surgery, at UU and Consultant at Dept. of Plastic- and Maxillofacial Surgery, UU Hospital; Håkan Lanshammar and Kjartan Halvorsen, Dept. of Information Technology, UU; Roland Johansson, Dept. of Neurophysiology, Umeå University; PiezoMotors AB; SenseGraphics AB

Funding: Knowledge Foundation (KK Stiftelsen) 5250000 SEK

Period: 090810-120810

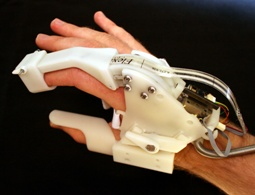

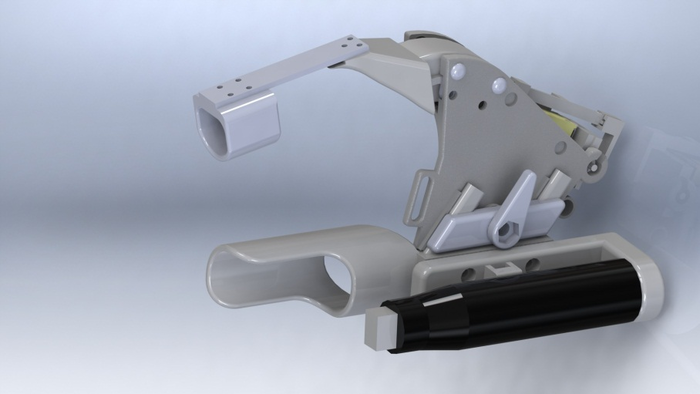

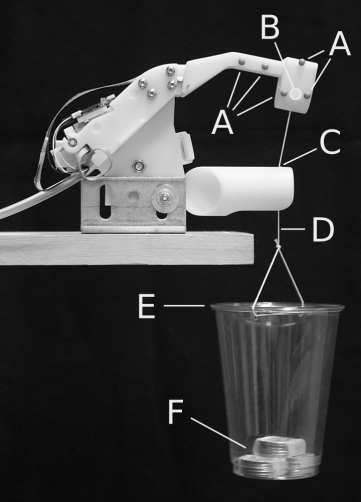

Abstract: Hardware Developments. In the last eight months of this project, we built generations three and four of the haptics hardware. In the third generation, the force sensor was replaced with a sensor using force-sensitive ink printed on a flexible polymer carrier (Figure 2). The force sensor was placed close to the fingertip to reduce the effects of mechanical friction and elastic deformation. To reduce play in the joints and plastic deformation of the more critical mechanical details, some parts were redesigned in steel and aluminum. The evaluation of the third generation clearly indicates that the desired haptic perception can be reached with this solution, with most artifacts attributable to the flexible force sensor. This was later verified in the fourth and last version, where a high-quality strain gauge force sensor replaced the force-sensitive ink on the polymer carrier. In this last design (Figure 2), the resilience between end-effector and force cell is negligible and most previously observed artifacts are remedied.

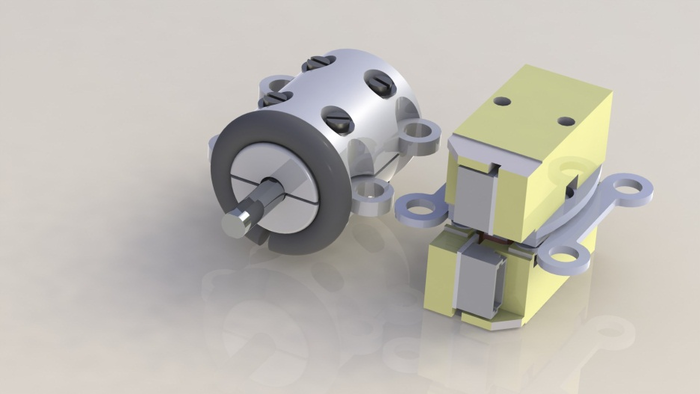

The two main remaining issues with the glove are audible noise and speed. The motor used in the glove prototypes is a standard component for high-precision movements, and its maximum speed does not allow for very fast finger movements. A new ultrasonic motor, developed for this project (Figure 3), was evaluated and tested experimentally. The motor was designed to operate in the inaudible ultrasonic frequency range, and the maximum speed was experimentally verified to be 160 mm/s at no load. The motor's force level in combination with the high speed makes it well suited for linear direct drive (no leverage) of a glove joint.

In summary, the prototype admittance-type haptic glove with a compact integrated piezoelectric motor can produce both accurate force-displacement responses of non-linear elastic material stiffness and a fast and stable response to an applied load.

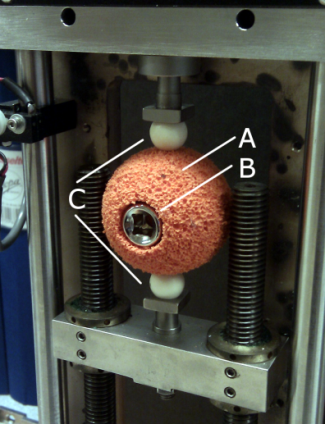

Software Developments. Stiffness, the relationship between load and deflection, is of interest in haptic rendering because it is a mechanical property that we use to distinguish one object from another and to decide how an object should be handled. While an ideal spring obeys a linear relationship between load and deflection, many load-deflection curves of real objects are non-linear. To test the proposed glove's ability to render stiffness, we loaded the controller with a series of load-deflection curves measured on real, physical objects, and determined how closely the glove can follow these curves by measuring the actual position of the index finger thimble while applying a series of known loads. We generated the sampled load/deflection values during compression of several balls using a Minimat 2000 compression and tensile testing machine (Figure 4). In addition, for two of the sampled materials we measured how fast the thimble reaches the target deflection corresponding to a defined load applied at the thimble (Figure 4).

We showed that the glove can realistically display load-deflection curves obtained from physical samples, and that linear compliance compensation in the control loop can further improve the accuracy. After a load is applied to the thimble, the dynamic response is a fast and stable transition to the new target position without overshoot or oscillation.
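As a rough illustration of stiffness rendering from sampled data, the sketch below looks up a target deflection for a measured load. It assumes piecewise-linear interpolation between samples and a single constant compliance term for the device; the function names, the sample values, and the sign and size of the compensation are hypothetical and are not taken from the project's controller.

```python
from bisect import bisect_right


def target_deflection(load, loads, deflections):
    """Piecewise-linear lookup of deflection (mm) for a measured load (N).

    `loads` must be strictly increasing. Loads outside the sampled range
    are clamped to the first or last sample.
    """
    if load <= loads[0]:
        return deflections[0]
    if load >= loads[-1]:
        return deflections[-1]
    i = bisect_right(loads, load)  # loads[i - 1] <= load < loads[i]
    t = (load - loads[i - 1]) / (loads[i] - loads[i - 1])
    return deflections[i - 1] + t * (deflections[i] - deflections[i - 1])


def compensated_target(load, loads, deflections, compliance_mm_per_n=0.0):
    """Assumed linear compliance compensation.

    The device itself is assumed to give way by `compliance_mm_per_n * load`,
    so that amount is subtracted from the commanded deflection.
    """
    return target_deflection(load, loads, deflections) - compliance_mm_per_n * load


# Hypothetical samples for a non-linear (stiffening) object.
LOADS = [0.0, 1.0, 2.0, 4.0]        # N
DEFLECTIONS = [0.0, 2.0, 3.0, 4.0]  # mm

if __name__ == "__main__":
    for load in (0.5, 1.5, 3.0):
        print(load, target_deflection(load, LOADS, DEFLECTIONS))
```

A lookup table keeps the rendering independent of any particular material model, which suits curves measured on physical samples.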


Snap-to-fit. Virtual assembly of complex objects has application in domains ranging from surgery planning to archaeology. In these domains the objective is to plan the restoration of skeletal anatomy or archaeological artifacts and to achieve an optimal reconstruction without causing further damage. Haptics can improve an assembly task by giving feedback when objects collide, but precise fitting of fractured objects guided by delicate haptic cues similar to those present in the physical world requires haptic display transparency beyond the performance of today's systems. We developed a haptic alignment tool that combines a 6 Degrees-of-Freedom (DOF) attraction force with traditional 6 DOF contact forces to pull a virtual object towards a local stable fit with a fixed object. Hierarchical data structures and pre-computation combine to achieve haptic rates for fractured surfaces with over 5000 points. We demonstrated the use of our system on applications from both cranio-maxillofacial surgery and archaeology. (See Project 2.)

Ingrid Carlbom, Pontus Olsson, Fredrik Nysjö

Partner: Jan-Michaél Hirsch, Dept. of Surgical Sciences, Oral & Maxillofacial Surgery, at UU and Consultant at Dept. of Plastic- and Maxillofacial Surgery, UU Hospital

Funding: See Project 1, Whole Hand Haptics with True 3D Display

Period: 090810-120810

Abstract: Cranio-maxillofacial surgery to restore normal skeletal anatomy in patients with serious trauma to the face can be very complex and time-consuming. It is generally accepted that careful pre-operative planning leads to a better outcome, with a higher degree of function, reduced morbidity, and reduced time in the operating room. However, today's surgery planning systems are primitive, relying mostly on the user's ability to plan complex tasks using a two-dimensional graphical interface.

We developed a system for planning the restoration of skeletal anatomy in facial trauma patients which combines stereo visualization with six degrees-of-freedom, high-fidelity haptic feedback to enable analysis, planning, and preoperative testing of alternative solutions for restoring bone fragments to their proper positions in a virtual model derived from patient-specific CT data. The stereo display gives the surgeon accurate visual spatial perception and the haptics system guides the surgeon by providing intuitive contact forces when bone fragments are in contact as well as six degrees-of-freedom attraction forces (Snap-to-fit) that can be used to find precise bone fragment alignment.

A senior surgeon with no prior experience of the system received 45 minutes of training on it. He then completed a virtual reconstruction of a complex mandible fracture in 22 minutes, with a reduced and well-adapted result.

Filip Malmberg, Ingela Nyström, Ewert Bengtsson, Stefan Seipel

Funding: TN-faculty, UU

Period: 0301-

Abstract: Modern medical imaging techniques provide 3D images of increasing complexity. Better ways of exploring these images for diagnostic and treatment planning purposes are needed. Combined stereoscopic and haptic display of the images forms a powerful platform for such image analysis. In order to work with specific patient cases, it is necessary to be able to work directly with the medical image volume and to generate the relevant 3D structures as they are needed for the visualization. Most work so far on haptic display uses predefined object surface models. In this project, we are creating the tools necessary for effective interactive exploration of complex medical image volumes for diagnostic or treatment planning purposes through combined use of haptic and 3D stereoscopic display techniques. The developed methods are tested on real medical application data. Our current applications are described further in projects 7 and 8.

A software package for interactive visualization and segmentation developed within this project has been released under an open-source license. The package, called WISH, is available for download at http://www.cb.uu.se/research/haptics.

Filip Malmberg, Ingela Nyström, Ewert Bengtsson

Funding: TN-faculty, UU

Period: 0901-

Abstract: Image segmentation, the process of identifying and separating relevant objects and structures in an image, is a fundamental problem in image analysis. Accurate segmentation of objects of interest is often required before further processing and analysis can be performed. Despite years of active research, fully automatic segmentation of arbitrary images remains an unsolved problem.

Interactive segmentation methods use human expert knowledge as additional input, thereby making the segmentation problem more tractable. A successful semi-automatic method minimizes the required user interaction time, while maintaining tight user control to guarantee the correctness of the result. The input from the user is typically given in one of two forms:

- Boundary constraints: The user is asked to provide pieces of the desired segmentation boundary.
- Regional constraints: The user is asked to provide a partial labeling of the image elements (e.g., marking a small number of image elements as "object" or "background").
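To make the regional form of input concrete, here is a minimal sketch of seeded segmentation on a small 2D image. It is a generic minimax-path region competition, assumed purely for illustration, and is not the method developed in this project. The names `seeded_segmentation`, `IMAGE`, and `SEEDS` are hypothetical.

```python
import heapq


def seeded_segmentation(image, seeds):
    """Propagate seed labels to all pixels of a 2D image.

    `image` is a list of rows of intensities and `seeds` maps (row, col)
    to a label. The cost of a path is the largest intensity step along it,
    and each pixel takes the label of the seed that reaches it most cheaply.
    """
    rows, cols = len(image), len(image[0])
    cost = [[float("inf")] * cols for _ in range(rows)]
    label = [[None] * cols for _ in range(rows)]
    heap = []
    for (r, c), lab in seeds.items():
        cost[r][c] = 0.0
        label[r][c] = lab
        heapq.heappush(heap, (0.0, r, c))
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > cost[r][c]:
            continue  # stale queue entry, a cheaper path was found already
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                step = abs(image[nr][nc] - image[r][c])
                nd = max(d, step)  # minimax path cost
                if nd < cost[nr][nc]:
                    cost[nr][nc] = nd
                    label[nr][nc] = label[r][c]
                    heapq.heappush(heap, (nd, nr, nc))
    return label


# Hypothetical image: dark region on the left, bright region on the right.
IMAGE = [
    [10, 10, 90, 90],
    [10, 12, 88, 90],
    [11, 10, 91, 89],
]
# The user's regional constraints: one seed per region.
SEEDS = {(0, 0): "background", (0, 3): "object"}

if __name__ == "__main__":
    for row in seeded_segmentation(IMAGE, SEEDS):
        print(row)
```

With only two marked pixels, the labels spread up to the strong intensity edge, which is the kind of reduction in user effort that regional constraints aim for.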

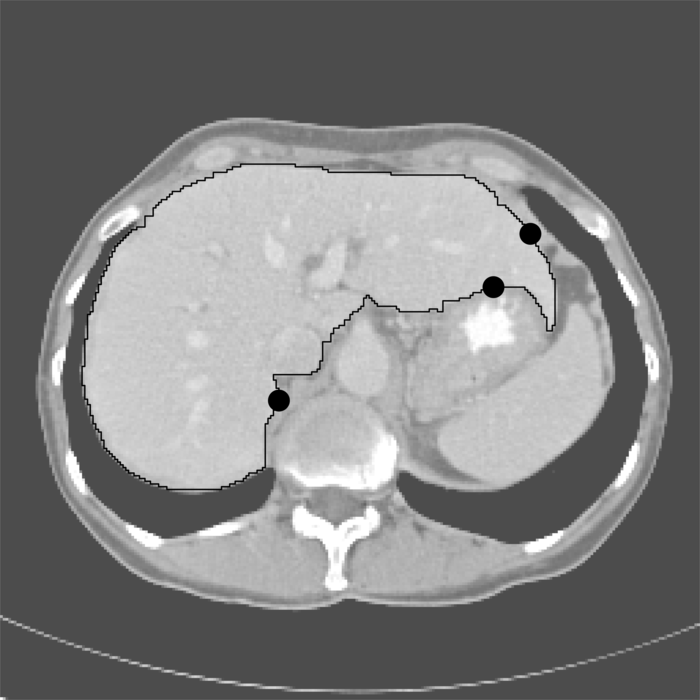

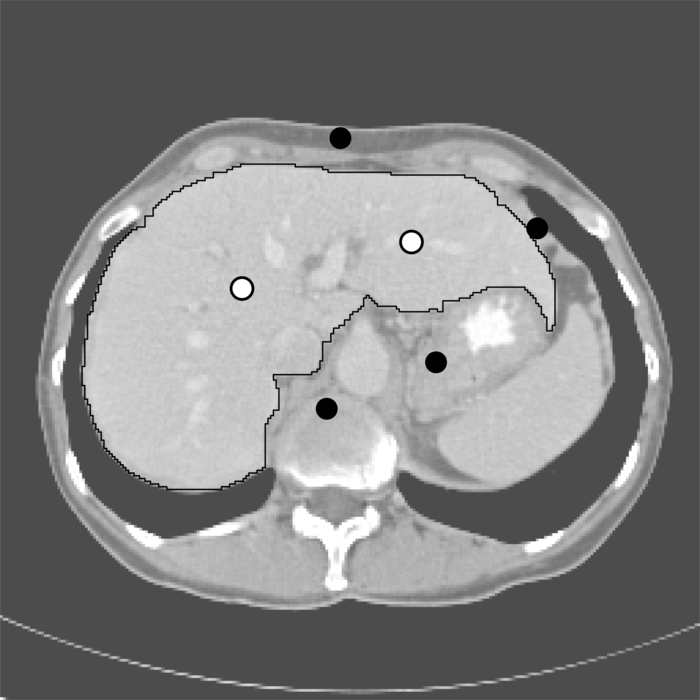

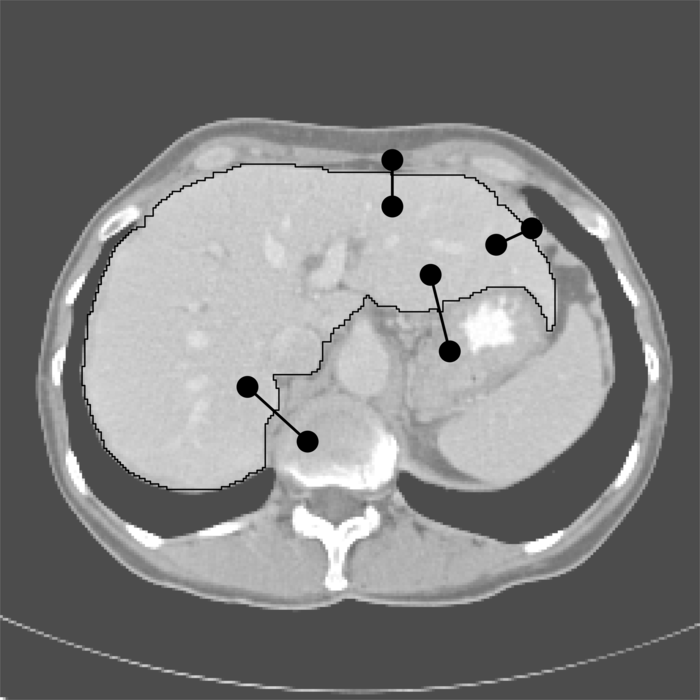

In a recent publication, we showed that these two types of input can be seen as special cases of what we refer to as generalized hard constraints. This concept is illustrated in Figure 5. An important consequence of this result is that it facilitates the development of general-purpose methods for interactive segmentation that are not restricted to a particular paradigm for user input.

In 2012, results from this project were presented at the International Conference on Pattern Recognition (ICPR) in Tsukuba, Japan.


Filip Malmberg, Robin Strand, Ingela Nyström, Ewert Bengtsson

Partners: Joel Kullberg, Håkan Ahlström, Dept. of Radiology, Oncology and Radiation Science, UU

Funding: TN-faculty, UU

Period: 1106-

Abstract: Three-dimensional imaging techniques such as computed tomography (CT) and magnetic resonance imaging (MRI) are now routinely used in medicine. This has led to an ever-increasing flow of high-resolution, high-dimensional image data that needs to be qualitatively and quantitatively analyzed. Typically, this analysis requires accurate segmentation of the image.

At CBA, we have been developing powerful new methods for interactive image segmentation (see Project 4). In this project, we seek to employ these methods for segmentation of medical images, in collaboration with the Department of Radiology, Oncology and Radiation Science (ROS) at the Uppsala University Hospital.

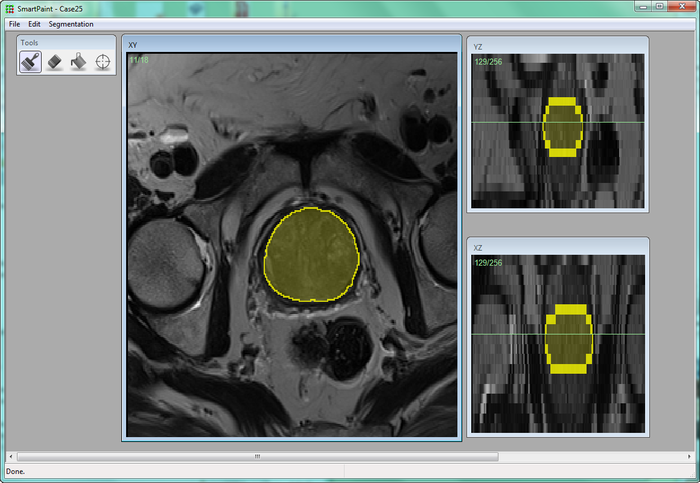

During 2012, new software for interactive segmentation, called Smartpaint, was developed within this project (see Figure 6). We used this software to participate in a MICCAI challenge on prostate segmentation (PROMISE12) and placed third.


Lennart Svensson, Ida-Maria Sintorn, Johan Nysjö, Ingela Nyström, Anders Brun, Gunilla Borgefors

Partners: Dept. of Cell and Molecular Biology, Karolinska Institute; SenseGraphics AB

Funding: The Visualization Program by Knowledge Foundation; Vårdal Foundation; Foundation for Strategic Research; VINNOVA; Invest in Sweden Agency

Period: 0807-

Abstract: Electron tomography is the only microscopy technique that allows 3-D imaging of biological samples at nanometer resolution. It thus enables studies of both the dynamics of proteins and individual macromolecular structures in tissue. However, electron tomography images have a low signal-to-noise ratio, which makes image analysis methods an important tool in interpreting the images. The ProViz project aims at developing visualization and analysis methods in this area.

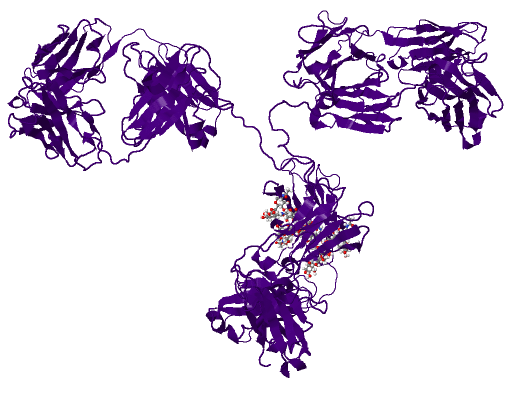

During 2012 we investigated GPU implementation techniques for registration in these images. The results were presented at the International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, VISIGRAPP 2012. With a specialized implementation in CUDA we achieved speed increases of an order of magnitude compared to a parallel CPU implementation. During the year we also investigated a new way of creating registration templates for finding proteins in these images (Figure 7), and continued to work on the ProViz software package, which will make the methods developed in the project easily accessible to other researchers.

Figure 7 panels: PDB structure, PDB template, new template.

Filip Malmberg, Ewert Bengtsson, Ingela Nyström, Johan Nysjö

Partners: Jan-Michaél Hirsch, Elias Messo, Babett Williger, Andreas Thor, Dept. of Surgical Sciences, UU Hospital

Funding: TN-faculty, UU; NovaMedTech

Period: 0912-

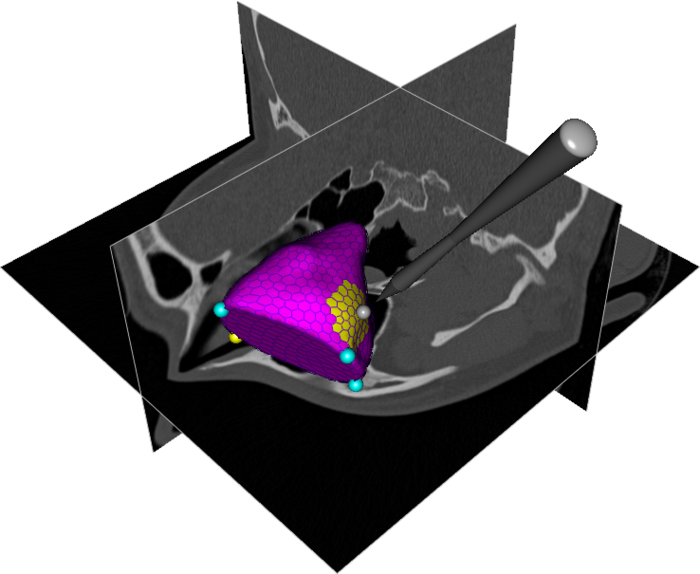

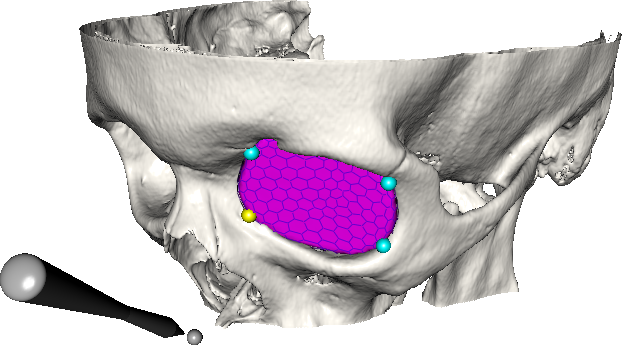

Abstract: A central problem in cranio-maxillofacial (CMF) surgery is to restore the normal anatomy of the skeleton after defects, i.e., malformations, tumors and trauma to the face. This is particularly difficult when a fracture causes vital anatomical structures such as the bone segments to be displaced significantly from their proper position, when bone segments are missing, or when a bone segment is located in such a position that any attempt to restore it into its original position poses considerable risk of causing further damage to vital anatomical structures such as the eye or the central nervous system. There is ample evidence that careful pre-operative planning can significantly improve the precision and predictability and reduce morbidity of the craniofacial reconstruction. In addition, time in the operating room can be reduced. An important component in surgery planning is to be able to accurately measure the extent of certain anatomical structures. Of particular interest in CMF surgery are the shape and volume of the orbits (eye sockets), compared between the left and right sides. These properties can be measured in CT images of the skull, but this requires accurate segmentation of the orbits. Today, segmentation is usually performed by manual tracing of the orbit in a large number of slices of the CT image. This task is very time-consuming and sensitive to operator error. Semi-automatic segmentation methods could reduce the required operator time significantly. In this project, we are developing a prototype of a semi-automatic system for segmenting the orbit in CT images (see Figure 8).

During 2012, we have been investigating two additional applications for the system: volumetric measurements of the upper airway space in cone-beam CT (CBCT) images; and volumetric measurements of the maxillary sinuses in CT images.
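Once a structure has been segmented, a volumetric measurement reduces to counting voxels. The sketch below assumes a binary mask stored as nested lists and a known voxel spacing. The function names and the left/right asymmetry measure are illustrative assumptions, not the measures used in the project.

```python
def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary 3D mask in millilitres.

    `mask` is indexed as mask[slice][row][col] with values 0 or 1, and
    `spacing_mm` is the voxel size (dz, dy, dx) in millimetres.
    """
    dz, dy, dx = spacing_mm
    voxel_mm3 = dz * dy * dx
    count = sum(v for slice_ in mask for row in slice_ for v in row)
    return count * voxel_mm3 / 1000.0  # 1 ml = 1000 mm^3


def asymmetry_percent(left_ml, right_ml):
    """Absolute left/right difference relative to the mean volume."""
    mean = (left_ml + right_ml) / 2.0
    return 100.0 * abs(left_ml - right_ml) / mean


if __name__ == "__main__":
    # Hypothetical 2 x 2 x 2 mask with 1.0 x 0.5 x 0.5 mm voxels.
    tiny_mask = [[[1, 1], [1, 1]], [[1, 1], [1, 1]]]
    print(mask_volume_ml(tiny_mask, (1.0, 0.5, 0.5)))
    print(asymmetry_percent(30.0, 27.0))
```

Reading the spacing from the image header rather than assuming isotropic voxels matters here, since CT slice thickness often differs from the in-plane resolution.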


Filip Malmberg, Johan Nysjö, Ingela Nyström, Ida-Maria Sintorn

Partner: Albert Christersson, Dept. of Orthopedics, UU Hospital

Funding: TN-faculty, UU

Period: 1111-

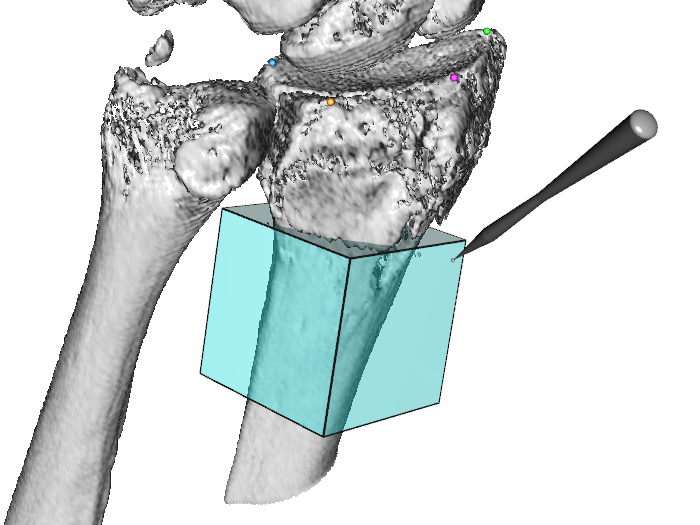

Abstract: To decide on the correct treatment of a fracture, for example, whether it needs to be operated on or not, it is important to assess the details of the fracture. One of the most important factors is the fracture displacement, particularly the angulation of the fracture. When a fracture is located close to a joint, for example, in the wrist, which is the most common fracture location in humans, the angulation of the joint line in relation to the long axis of the long bone needs to be measured. Since the surface of the joint line in the wrist is highly irregular, and since it is difficult to take X-rays of the wrist in exactly the same projections from time to time, conventional X-ray is not an optimal method for this purpose. In most clinical cases, conventional X-ray is satisfactory for making a correct decision about the treatment, but when comparing two different methods of treatment, for example, two different operation techniques, the angulation of the fractures before and after the treatment has to be measured with higher accuracy.

In this project, we are developing a system for measuring these angles in 3D computed tomography (CT) images of the wrist. Our proposed system is semi-automatic; the user is required to guide the system by indicating the approximate position and orientation of various parts of the radius bone. This information is subsequently used as input to an automatic algorithm that makes precise angle measurements. To facilitate user interaction in 3D, we use a system that supports 3D input, stereo graphics, and haptic feedback.

A RANSAC-based method for estimating the long axis of the radius bone was presented at the International Conference on Computer Vision and Graphics (ICCVG 2012). We are currently developing methods and software for measuring the orientation of the joint surface of the radius. Some preliminary results of this work are shown in Figure 9.
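For readers unfamiliar with RANSAC, the sketch below fits a 3D line to points that include outliers. It is a generic textbook version under assumed inputs, not the method presented at ICCVG 2012. The function names, threshold, iteration count, and the sample `POINTS` are hypothetical.

```python
import math
import random


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _norm(a):
    return math.sqrt(sum(x * x for x in a))


def point_line_distance(p, origin, direction):
    """Distance from p to the line through `origin` with unit `direction`."""
    return _norm(_cross(_sub(p, origin), direction))


def ransac_line(points, threshold, iterations=200, seed=0):
    """Return ((origin, unit_direction), inliers) for the best line found."""
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(iterations):
        a, b = rng.sample(points, 2)  # minimal sample: two points define a line
        d = _sub(b, a)
        length = _norm(d)
        if length == 0.0:
            continue  # coincident points give no direction
        direction = tuple(x / length for x in d)
        inliers = [p for p in points
                   if point_line_distance(p, a, direction) <= threshold]
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = (a, direction), inliers
    return best_line, best_inliers


# Hypothetical data: 20 points on the z axis plus three outliers.
POINTS = [(0.0, 0.0, float(z)) for z in range(20)]
POINTS += [(5.0, 5.0, 1.0), (-4.0, 3.0, 7.0), (6.0, -2.0, 12.0)]

if __name__ == "__main__":
    (origin, direction), inliers = ransac_line(POINTS, threshold=0.5)
    print(direction, len(inliers))
```

In practice the winning inlier set would usually be refined with a least-squares fit; that step is omitted to keep the sketch short.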


Robin Strand

Partners: Joel Kullberg, Håkan Ahlström, Dept. of Radiology, Oncology and Radiation Science, UU

Funding: Faculty of Medicine, UU

Period: 1208-

Abstract: Magnetic Resonance Tomography (MR) is an image acquisition technique that does not use ionizing radiation and is very useful in clinical practice and in medical research, e.g., for analyzing the composition of the human body. At the division of Radiology, Uppsala University, a huge amount of whole-body MR data is acquired for research on the connection between the composition of the human body and disease.

To compare these whole-body volume images voxel by voxel, they should be aligned by an image registration process. To register whole-body MR volumes robustly, this project uses, for example, segmented tissue (see, e.g., Project 5) and landmarks in a non-rigid registration process.

Elisabeth Linnér, Robin Strand

Funding: TN-faculty, UU

Period: 1005-

Abstract: Three-dimensional images are widely used in, for example, health care. With optimal sampling lattices, the amount of data can be reduced by 30% without affecting the image quality. In this project, methods for image acquisition, analysis and visualization using optimal sampling lattices are studied and developed, with special focus on magnetic resonance imaging. The intention is that this project will lead to faster and better processing of images with lower demands on data storage capacity.
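The 30% figure can be checked with a short calculation, assuming that the optimal lattice meant here is the body-centered cubic (BCC) lattice and that the image has an isotropic band limit. Under those assumptions the spectrum replicas sit on the reciprocal face-centered cubic (FCC) lattice, and the sample saving follows from the ratio of sphere packing densities. The function name is hypothetical.

```python
import math


def bcc_to_cubic_sample_ratio():
    """Samples needed on a BCC lattice relative to a cubic lattice.

    Sampling density is inversely proportional to how densely the spherical
    spectrum replicas pack on the reciprocal lattice: simple cubic for
    cubic sampling, FCC for BCC sampling.
    """
    cubic_packing = math.pi / 6.0                    # simple cubic packing density
    fcc_packing = math.pi / (3.0 * math.sqrt(2.0))   # FCC packing density
    return cubic_packing / fcc_packing               # equals 1 / sqrt(2)


if __name__ == "__main__":
    ratio = bcc_to_cubic_sample_ratio()
    print(f"BCC needs {ratio:.4f} of the cubic samples, "
          f"a saving of {100.0 * (1.0 - ratio):.1f}%")
```

The ratio is 1/√2, so the saving is about 29.3%, consistent with the rounded 30% quoted above.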

A paper on normalized convolution on different two-dimensional grids was presented at the 21st International Conference on Pattern Recognition (ICPR), Tsukuba, Japan.

Ewert Bengtsson, Anders Hast

Partner: Tony Barrera, Uppsala

Funding: TN-faculty, UU

Period: 9911-

Abstract: Computer graphics is increasingly being used to create realistic images of 3D objects for applications in entertainment (animated films, games), commerce (showing 3D images of products on the web), industrial design and medicine. For the images to look realistic, high-quality shading and rendering of surface texture and topology are necessary. A proper understanding of the mathematics behind the algorithms can make a big difference in rendering quality as well as speed. Over the years, we have in this project re-examined several of the established algorithms and found new mathematical ways of simplifying the expressions and increasing the implementation speeds without sacrificing image quality. We have also invented a number of completely new algorithms. The project is carried out in close collaboration with Tony Barrera, an autodidact mathematician. It has been running since 1999 and has resulted in more than 25 international publications and a PhD thesis. During 2012 a number of new mathematical problems were investigated and one of the previously developed ones was finalized for submission to a conference.

Stefan Seipel, Fei Liu

Funding: University of Gävle; TN-faculty, UU

Period: 110801-

Abstract: Mobile devices have recently seen an enormous advancement in their computational power, with many exciting and promising pieces of technology becoming available at the same time, such as mobile graphics processing, spatial positioning, and access to geo-spatial databases. This research project in "ubiquitous visualization" will deal with mobile visualization of spatial data in indoor and outdoor environments. It addresses several key problems for robust mobile visualization, such as spatial tracking and calibration, image-based 2D and 3D registration, and efficient graphical representations in mobile user interfaces. Evaluation of developed methods or techniques, mainly with respect to the human factor, will be an integral part of these studies in order to achieve the best user experience. Application scenarios studied in this project will predominantly, but not exclusively, be in the field of urban spaces and built environment.

Stefan Seipel

Partners: Joakim Widén, David Lingfors, Solid State Physics, Dept. of Engineering Sciences, UU

Funding: University of Gävle; TN-faculty, UU

Period: 1211-

Abstract: The assessment of solar energy yield in urban environments depends primarily on estimated solar irradiance and local topography or building architecture. To maintain manageable computational complexity, spatial and temporal detail is traditionally sacrificed in irradiation calculations. Often, coarse urban models (LIDAR data) and long-term time-averaged observations of solar irradiance/vegetation indexes/weather are used. An in-depth exploration of the solar yield on building facades would ideally account for very short-term variations of solar exposure within given observation intervals. Those variations depend on many factors such as solar angle, local building geometry and nearby occluding building structures. Other variables in these calculations are monthly variation of vegetation and daily variation of weather conditions. Highly detailed analysis of solar energy yield requires numerical integration of the irradiance equations with dense sampling of all temporally and spatially varying variables. This approach allows for insights into the quality of solar energy yield in space over time. On the other hand, it is computationally demanding, which hampers interactive exploration. The main objective of this project is to develop and study new computational approaches to overcome these limitations and to allow for spatio-temporal visualization and exploration of solar energy yield in the built environment (Figure 10).
