Ingrid Carlbom, Ewert Bengtsson, Filip Malmberg, Ingela Nyström, Stefan Seipel, Pontus Olsson, Fredrik Nysjö

Partners: Stefan Johansson, Material Science, Dept. of Engineering Science, UU; Jonny Gustafsson and Lars Mattson, Industrial Metrology and Optics Group, KTH; Jan-Michaél Hirsch, Dept. of Surgical Sciences, Oral & Maxillofacial Surgery, UU and Consultant at Dept. of Plastic- and Maxillofacial Surgery, UU Hospital; Håkan Lanshammar and Kjartan Halvorsen, Dept. of Information Technology, UU; Roland Johansson, Dept. of Neurophysiology, Umeå University; PiezoMotors AB, SenseGraphics AB

Funding: Knowledge Foundation (KK Stiftelsen)

Period: 090810-

Abstract: Our vision is a new interaction paradigm that gives the user an unprecedented ability to touch and manipulate high-contrast, high-resolution, three-dimensional (3D) virtual objects suspended in space, using a glove whose haptic feedback is so realistic that the interaction closely resembles interaction with real objects. The system has two main components. The first is a haptic system comprising a glove mounted on a robot arm that gives the user force feedback during manipulation of an object. The second is a three-dimensional display based on a holographic optical element (HOE) that lets the user interact with a virtual object by reaching into it with the gloved hand.

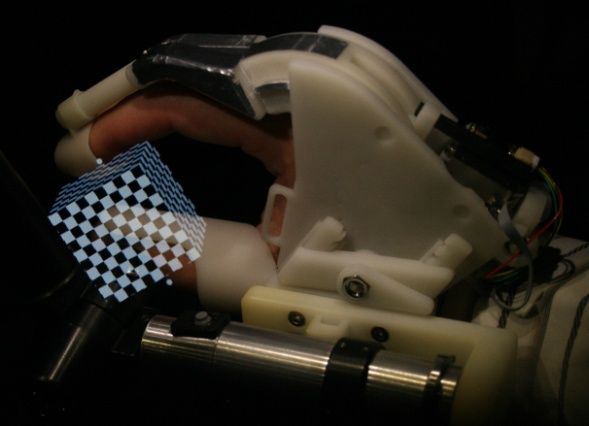

Haptics Hardware. After experiments with the first-generation glove built in 2010, we built a slimmer and lighter exoskeleton and moved the force sensor in front of the linkage to make the glove more sensitive to movements of the distal parts of the fingers. The exoskeleton prototype provides six-degree-of-freedom (DOF) movement of the hand and one-DOF gripping with the thumb and index finger. The six-DOF movements are provided by a commercial haptic arm, the SensAble Phantom Premium, while the gripping exoskeleton "glove" was developed within this project.

Haptics Software. One major goal of the Whole Hand Haptics project is to allow the user to feel object stiffness. Stiffness helps us identify objects in the world around us, and it is particularly important in virtual surgery for the manipulation of soft tissue. With this glove, the user can squeeze an object and feel differences in stiffness, something that, to our knowledge, has not been accomplished before with a compact glove. See Figure 2(a).
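Stiffness rendering in a gripping exoskeleton is commonly modelled as a virtual spring between the fingertips and the object surface. The sketch below assumes a linear (Hooke's law) spring with a clamp to a device force limit; the function name, units, stiffness values, and the 10 N limit are hypothetical and not taken from the project's software.

```python
def grip_force(aperture_mm: float, object_width_mm: float,
               stiffness_n_per_mm: float, max_force_n: float = 10.0) -> float:
    """Reaction force (N) fed back to the thumb/index exoskeleton.

    Hypothetical linear-spring model: no force until the fingers touch the
    object, then the force grows linearly with the compression depth and is
    clamped to an assumed device maximum.
    """
    penetration = object_width_mm - aperture_mm  # compression depth in mm
    if penetration <= 0.0:
        return 0.0  # fingers not in contact with the object
    return min(stiffness_n_per_mm * penetration, max_force_n)

# The same 5 mm squeeze on a soft and on a stiff virtual object
soft = grip_force(aperture_mm=35.0, object_width_mm=40.0, stiffness_n_per_mm=0.2)
stiff = grip_force(aperture_mm=35.0, object_width_mm=40.0, stiffness_n_per_mm=1.5)
```

The user perceives the two objects as different only through the different force for the same finger displacement, which is why a force sensor close to the fingertips matters.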

Another major goal of the Whole Hand Haptics project is to demonstrate that gripping an object with two (and later three) fingers allows object manipulation that is not feasible, or at least very cumbersome, with a single point of contact. Using the haptic glove prototype, we have created software to simulate two-finger interaction with a virtual object. The user can lift and manipulate the object in 3D, and a physics simulation that includes weight, inertia, and gravity adds to the realism (Figure 2(b)).
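The grasp-and-lift behaviour can be illustrated with Coulomb friction at two opposing contacts: the object follows the hand only while the friction it needs stays within 2·μ·N, where N is the grip (normal) force. This is a one-dimensional (vertical) sketch with assumed parameter names; the project's physics simulation is not described at this level of detail.

```python
G = 9.81  # gravitational acceleration, m/s^2

def object_accel(mass_kg, grip_force_n, mu, hand_accel):
    """Vertical acceleration of an object pinched between two fingers.

    The friction force needed for the object to move with the hand is clamped
    to the Coulomb limit of two contacts (2 * mu * grip force); when the limit
    is reached, the object slips relative to the fingers.
    """
    needed = mass_kg * (hand_accel + G)  # friction that would make it follow the hand
    limit = 2.0 * mu * grip_force_n      # maximum friction from two contacts
    friction = max(-limit, min(limit, needed))
    return friction / mass_kg - G

def step(height, velocity, accel, dt):
    """One semi-implicit Euler step: update velocity first, then position."""
    velocity += accel * dt
    height += velocity * dt
    return height, velocity

# Firm grip: the object stays in the stationary hand
a_firm = object_accel(mass_kg=0.2, grip_force_n=5.0, mu=0.5, hand_accel=0.0)
# Weak grip: the 1 N friction limit is below the ~1.96 N weight, so it slides down
a_weak = object_accel(mass_kg=0.2, grip_force_n=1.0, mu=0.5, hand_accel=0.0)
```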


New Display Hardware. Since our goal is to develop haptics for cranio-maxillofacial surgery, we need a display system that allows relatively uncomplicated porting of WISH, our toolkit for interactive medical image analysis with volume visualization and haptics, from conventional workstations to a stereo display with co-located haptics. We chose a SenseGraphics Display 300, a desktop-sized stereo workstation with radio-frequency shutter glasses, an LCD monitor, and a semi-transparent silvered mirror. Our tracking software and all our haptics now run on both the holographic display and the stereo display.

Perceptual Evaluation of Co-located and Non-co-located Haptics. We conducted a user study that investigates the advantages and disadvantages of physically co-located haptics on two different display types: the SenseGraphics semi-transparent mirror 3D display and our prototype autostereoscopic display based on a holographic optical element (HOE). We used two accuracy tasks with spatial accuracy as the dependent variable and one manipulation task with completion time as the dependent variable. The study shows that on both displays co-location significantly reduces completion time in the manipulation task, while it does not improve accuracy in the spatial accuracy tasks.

Improvements to the 3D Holographic Display. This year we remade the hologram assuming a smaller interocular distance. This change gives a correct viewing experience for more people, since there is now little risk that the slit width (the width of the viewing zone of one projector) exceeds the interocular distance of an adult. At the same time, the display was fine-tuned to reduce some of the slit transition artifacts.

Software System. The software for both the display and the haptics is based on the H3DAPI from SenseGraphics. We extended H3DAPI with a software library that provides calibration of the graphics and all the hardware components, including (1) projector calibration with keystone correction for the HOE display; (2) calibration of the Phantom haptic device to find the zero position of all its sensors, which required us to manufacture a hardware jig in addition to developing software; (3) calibration of the visual and haptic work volumes for each display; and (4) registration of the tracking and visual work volumes for each display.
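Keystone correction can be sketched as estimating a planar homography between where the corners of a projected test rectangle should appear and where they are actually observed, then pre-warping the image with the inverse. The code below uses the standard direct linear transform (DLT) with NumPy; the corner measurements are hypothetical, and this is an illustration rather than the calibration code in the project's H3DAPI extension.

```python
import numpy as np

def homography_from_points(src, dst):
    """Estimate H (3x3) with dst ~ H @ [x, y, 1] from >= 4 point pairs (DLT)."""
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src, float), np.asarray(dst, float)):
        # Each correspondence gives two linear equations in the 9 entries of H
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # Solution: right singular vector belonging to the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map 2D points through H, including the perspective division."""
    pts = np.asarray(pts, float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Hypothetical measurement: ideal rectangle corners and where they were observed
ideal = [(0, 0), (100, 0), (100, 80), (0, 80)]
observed = [(0, 0), (104, 3), (98, 85), (-3, 80)]
H = homography_from_points(ideal, observed)
# Pre-warping with the inverse makes the projected image land on the ideal rectangle
prewarp = np.linalg.inv(H)
```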

Matching and calibrating the visual work volume and the haptic work volume is essential, in particular when using co-located haptics, since humans easily notice discrepancies between the visual and the haptic work volumes. We acquired the OptiTrack system from NaturalPoint, an IR optical tracker with built-in motion capture and image processing. We integrated the tracker camera software with our version of the H3DAPI from SenseGraphics AB.
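Registering the tracker (or haptic device) coordinate frame to the visual work volume from a few corresponding points is a classic least-squares rigid registration problem. Below is a sketch using the Kabsch (SVD) method, assuming corresponding 3D points have already been collected, for example by touching known markers with the stylus; this is not necessarily how the project performs its calibration.

```python
import numpy as np

def rigid_register(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t (Kabsch)."""
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centred point sets
    cov = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(cov)
    # Guard against a reflection (det = -1) in the least-squares solution
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def rms_error(src, dst, R, t):
    """Residual registration error, in the units of the input points."""
    residual = np.asarray(src, float) @ R.T + t - np.asarray(dst, float)
    return float(np.sqrt((residual ** 2).sum(axis=1).mean()))
```

The RMS residual gives a direct measure of how far the haptic and visual work volumes disagree after calibration.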

Filip Malmberg, Ingela Nyström, Ewert Bengtsson, Stefan Seipel

Partner: Gunnar Jansson, Dept. of Psychology, UU

Funding: TN-faculty, UU

Period: 0301-

Abstract: Modern medical imaging techniques provide 3D images of increasing complexity. Better ways of exploring these images for diagnosis and treatment planning are needed. Combined stereoscopic and haptic display of the images forms a powerful platform for such image analysis. To work with specific patient cases, it is necessary to work directly with the medical image volume and to generate the relevant 3D structures as they are needed for the visualization. Most work so far on haptic display uses predefined object surface models. In this project, we are creating the tools needed for effective interactive exploration of complex medical image volumes for diagnosis or treatment planning through the combined use of haptic and 3D stereoscopic display techniques. The developed methods are tested on real medical application data. Our current applications are described further in projects 6 and 10.
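Working directly with the image volume, rather than with predefined surface models, means the haptic force has to be computed from the voxel data itself. One simple, commonly used scheme (an assumption here, not necessarily what WISH does) pushes the probe along the negative intensity gradient once the local intensity exceeds a threshold. The function names, threshold, and stiffness are hypothetical.

```python
import numpy as np

def central_gradient(vol, idx):
    """Central-difference intensity gradient at an integer voxel index (one-sided at borders)."""
    grad = np.zeros(vol.ndim)
    for axis in range(vol.ndim):
        lo, hi = list(idx), list(idx)
        lo[axis] = max(idx[axis] - 1, 0)
        hi[axis] = min(idx[axis] + 1, vol.shape[axis] - 1)
        step = hi[axis] - lo[axis]
        if step > 0:
            grad[axis] = (float(vol[tuple(hi)]) - float(vol[tuple(lo)])) / step
    return grad

def volume_force(vol, idx, threshold, stiffness):
    """Hypothetical volume-haptics force at voxel `idx` that pushes the probe out of bright tissue.

    The force points along the negative gradient (towards lower intensity) and grows
    linearly with how far the local intensity exceeds `threshold`.
    """
    value = float(vol[tuple(idx)])
    grad = central_gradient(vol, idx)
    norm = np.linalg.norm(grad)
    if value <= threshold or norm == 0.0:
        return np.zeros(vol.ndim)
    return -stiffness * (value - threshold) * grad / norm

# Synthetic volume: a bright blob whose intensity falls off with distance from the centre
zz, yy, xx = np.indices((21, 21, 21))
r = np.sqrt((zz - 10) ** 2 + (yy - 10) ** 2 + (xx - 10) ** 2)
blob = np.clip(8.0 - r, 0.0, None) * 10.0
```

Because the force is derived on the fly from the intensities, no surface model has to be extracted before the user can feel a structure.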

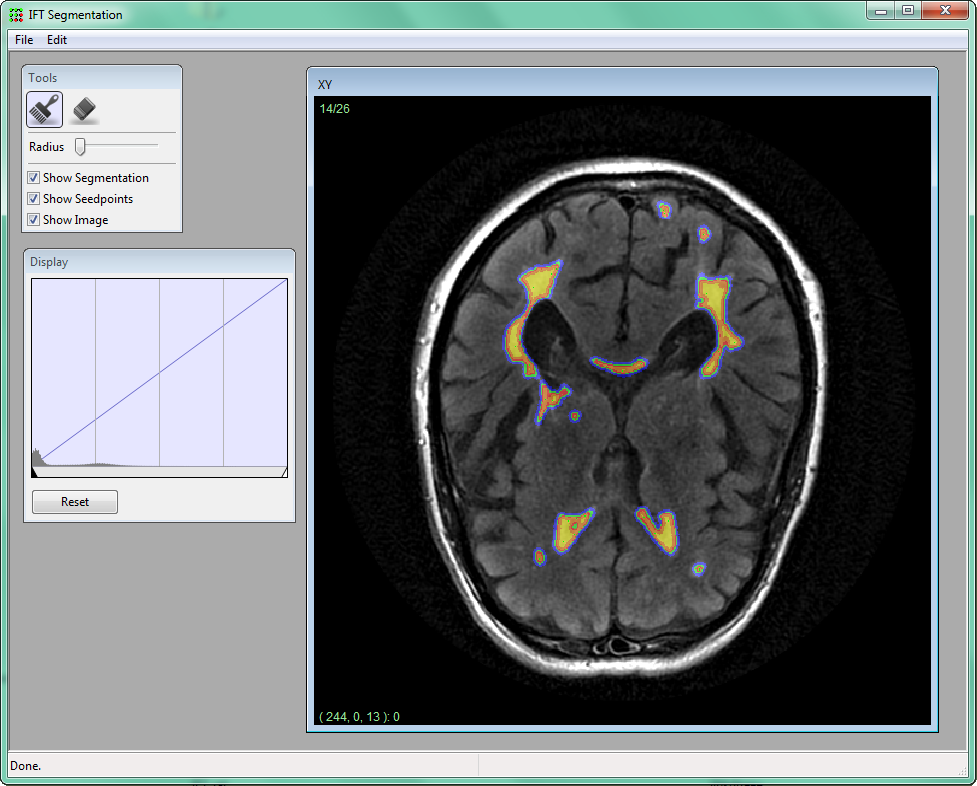

A software package for interactive visualization and segmentation developed within this project has been released under an open-source license. The package, called WISH, is available for download at http://www.cb.uu.se/research/haptics.

Filip Malmberg, Ingela Nyström, Ewert Bengtsson

Funding: TN-faculty, UU

Period: 0901-

Abstract: Image segmentation, the process of identifying and separating relevant objects and structures in an image, is a fundamental problem in image analysis. Accurate segmentation of objects of interest is often required before further processing and analysis can be performed. Despite years of active research, fully automatic segmentation of arbitrary images remains an unsolved problem.

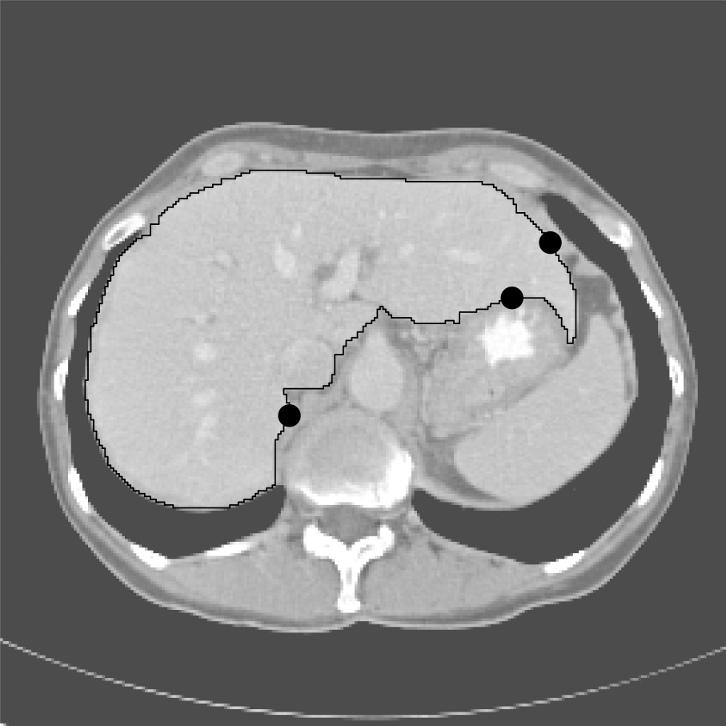

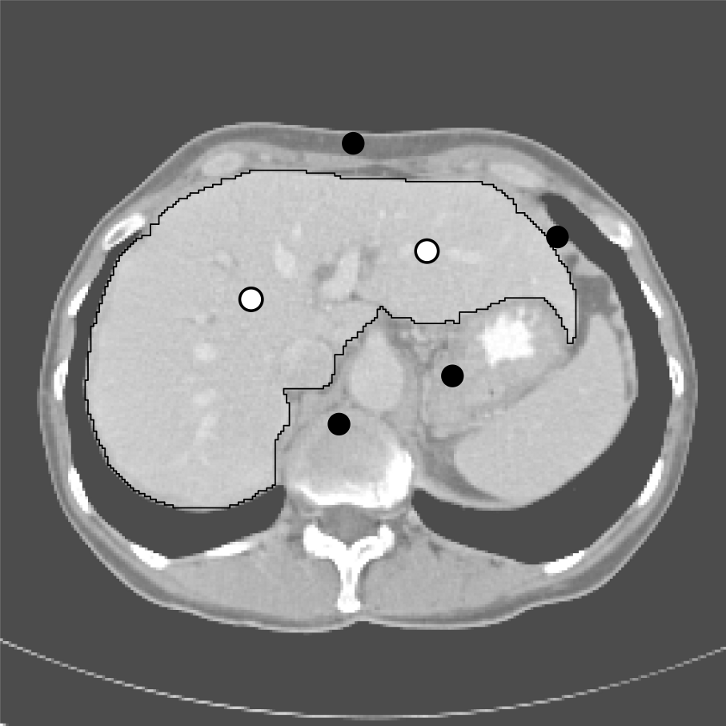

Interactive segmentation methods use human expert knowledge as additional input, thereby making the segmentation problem more tractable. A successful semi-automatic method minimizes the required user interaction time, while maintaining tight user control to guarantee the correctness of the result. The input from the user is typically given in one of two forms:

- Boundary constraints: the user is asked to provide pieces of the desired segmentation boundary.
- Regional constraints: the user is asked to provide a partial labelling of the image elements (e.g., marking a small number of image elements as "object" or "background").
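Regional constraints can be propagated to the whole image with a seeded, graph-based method. The sketch below shows one such approach, an image foresting transform with a max-arc path cost, in which each pixel receives the label of the seed it is most strongly connected to. The absolute intensity difference as arc weight and 4-connectivity are simplifying assumptions, and the function name is hypothetical.

```python
import heapq
import numpy as np

def seeded_segmentation(image, seeds):
    """Label each pixel with the seed whose minimax path reaches it at the lowest cost.

    image: 2D array; seeds: dict mapping (row, col) -> label (e.g. 1 = object, 0 = background).
    Path cost = largest arc weight along the path; arc weight = |I(p) - I(q)|.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    cost = np.full(img.shape, np.inf)
    label = np.full(img.shape, -1, dtype=int)
    heap = []
    for (r, c), lab in seeds.items():
        cost[r, c] = 0.0
        label[r, c] = lab
        heapq.heappush(heap, (0.0, r, c))
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > cost[r, c]:
            continue  # outdated queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = max(d, abs(img[r, c] - img[nr, nc]))
                if new_cost < cost[nr, nc]:
                    cost[nr, nc] = new_cost
                    label[nr, nc] = label[r, c]  # inherit the label of the predecessor
                    heapq.heappush(heap, (new_cost, nr, nc))
    return label
```

Marking a single object pixel and a single background pixel is enough here; further seeds only need to be added where the result is wrong, which keeps the user in control.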

This project was presented as part of the PhD thesis by Filip Malmberg that was defended in May 2011. In 2011, two papers related to this project were presented at the Scandinavian Conference on Image Analysis (SCIA) in Ystad, Sweden.


Filip Malmberg, Robin Strand, Ingela Nyström, Ewert Bengtsson

Partners: Joel Kullberg, Håkan Ahlström, Dept. of Radiology, Oncology and Radiation Science, UU

Funding: TN-faculty, UU

Period: 1106-

Abstract: Three-dimensional imaging techniques such as computed tomography (CT) and magnetic resonance imaging (MRI) are now routinely used in medicine. This has led to an ever-increasing flow of high-resolution, high-dimensional image data that needs to be analyzed qualitatively and quantitatively. Typically, this analysis requires accurate segmentation of the image.

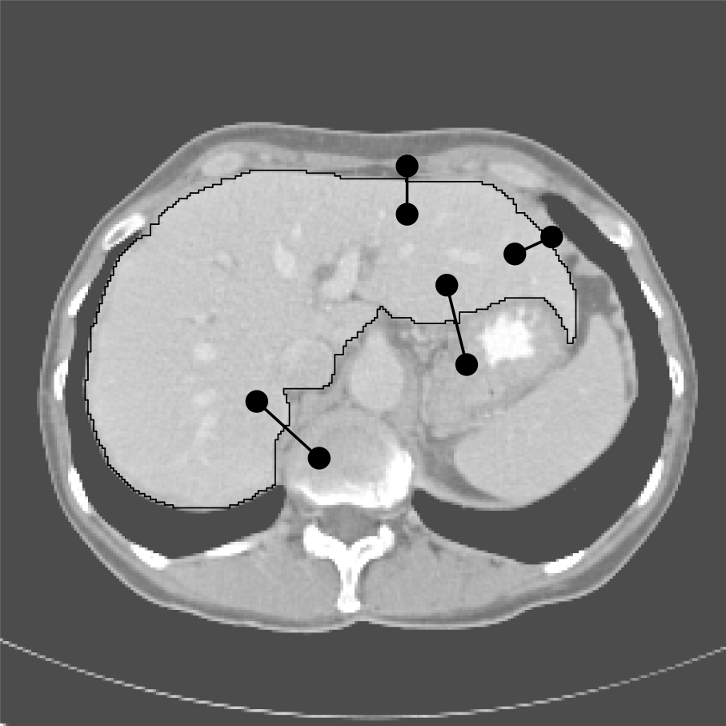

At CBA, we have been developing powerful new methods for interactive image segmentation (see Project 3). In this project, we seek to employ these methods for segmentation of medical images, in collaboration with the Dept. of Radiology, Oncology and Radiation Science (ROS) at the UU Hospital.

During 2011, the software and methods developed in this project were used in two clinical studies at the hospital: one on measuring white matter lesions in MR images of the human brain, and one on quantifying adipose tissue in whole-body MR images of rats. Publications describing the results of these studies are in preparation.


Lennart Svensson, Ida-Maria Sintorn, Johan Nysjö, Stina Svensson, Ingela Nyström, Anders Brun, Gunilla Borgefors

Partners: Dept. of Cell and Molecular Biology, Karolinska Institute; SenseGraphics AB

Funding: The Visualization Program by Knowledge Foundation; Vaardal Foundation; Foundation for Strategic Research; VINNOVA; Invest in Sweden Agency

Period: 0807-

Abstract: The traditional methods for determining the structure of proteins are X-ray crystallography and NMR spectroscopy. An alternative approach, Molecular Electron Tomography (MET), has more recently gained interest within structural biology, as it enables studies of both the dynamics of proteins and individual macromolecular structures in tissue. However, MET yields images with low resolution, compared with, e.g., X-ray crystallography, and a low signal-to-noise ratio. This creates a need for the new visualization and analysis methods developed in this project.

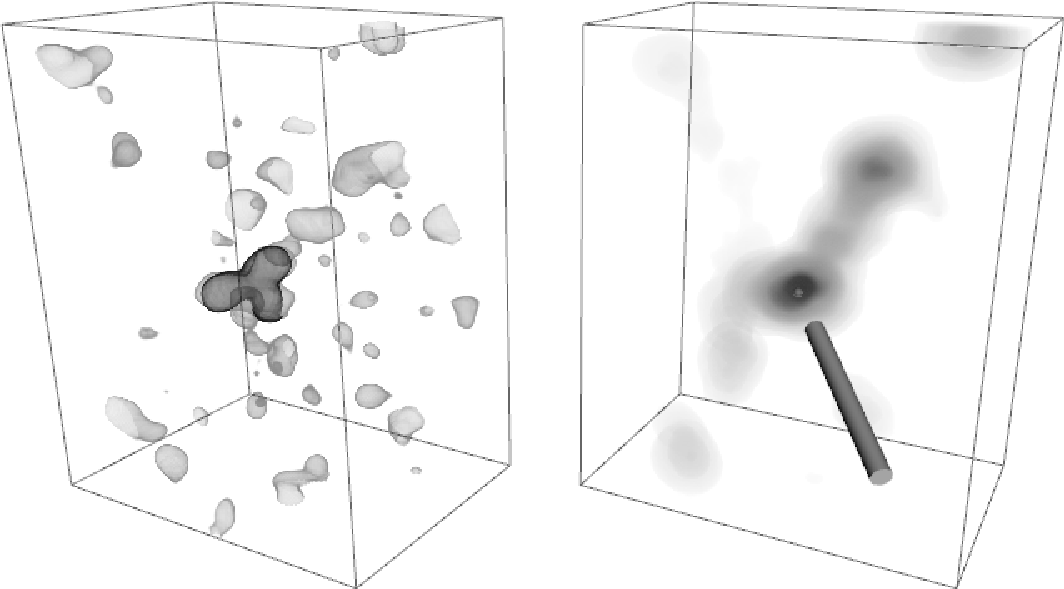

We have developed a method for automatic parameter estimation for proper visualization of MET volumes, as well as an interactive registration method in which the fitness landscape is explored interactively; see the example in Figure 5. A paper about the latter method was presented at the International Conference on Image Analysis and Processing (ICIAP 2011) in Italy in September. A follow-up study investigating different implementation techniques has been accepted to the International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2012). With a specialized CUDA implementation, we achieved a speed-up of an order of magnitude compared with a parallel CPU implementation.
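In an interactive registration setting, the fitness landscape can be thought of as a similarity score evaluated over candidate poses. As a simplified, translation-only sketch (the project's method also has to handle rotations, and its similarity measure is not specified here), normalized cross-correlation (NCC) of a structure template against every offset in a volume gives such a landscape. The brute-force loop is exactly the kind of computation that parallelizes well on a GPU.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally shaped arrays, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def fitness_landscape(volume, template):
    """NCC score of `template` at every integer offset where it fits inside `volume`."""
    out_shape = tuple(v - t + 1 for v, t in zip(volume.shape, template.shape))
    scores = np.empty(out_shape)
    for idx in np.ndindex(*out_shape):
        window = tuple(slice(i, i + t) for i, t in zip(idx, template.shape))
        scores[idx] = ncc(volume[window].astype(float), template.astype(float))
    return scores

# Synthetic example: the template is cut out of the volume at offset (3, 5, 2)
rng = np.random.default_rng(0)
volume = rng.random((12, 12, 12))
template = volume[3:7, 5:9, 2:6].copy()
scores = fitness_landscape(volume, template)
```

Displaying `scores` (or its local maxima) to the user is one way to let a person steer the optimizer away from false peaks caused by noise.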


Johan Nysjö, Filip Malmberg, Ewert Bengtsson, Ingela Nyström

Partners: Jan-Michaél Hirsch, Elias Messo, Babett Williger, Dept. of Surgical Sciences, UU Hospital

Funding: TN-faculty, UU; NovaMedTech

Period: 0912-

Abstract: A central problem in cranio-maxillofacial (CMF) surgery is to restore the normal anatomy of the skeleton after defects such as malformations, tumors, and trauma to the face. This is particularly difficult when a fracture displaces bone segments significantly from their proper position, when bone segments are missing, or when a bone segment is located such that any attempt to restore it to its original position poses a considerable risk of further damage to vital anatomical structures such as the eye or the central nervous system. There is ample evidence that careful pre-operative planning can significantly improve the precision and predictability of craniofacial reconstruction and reduce morbidity. In addition, time in the operating room can be reduced. An important component of surgery planning is the ability to accurately measure the extent of certain anatomical structures. Of particular interest in CMF surgery are the shape and volume of the orbits (eye sockets), comparing the left side with the right side. These properties can be measured in CT images of the skull, but this requires accurate segmentation of the orbits. Today, segmentation is usually performed by manually tracing the orbit in a large number of slices of the CT image. This task is very time-consuming and sensitive to operator errors. Semi-automatic segmentation methods could reduce the required operator time significantly. In this project, we are developing a prototype of a semi-automatic system for segmenting the orbit in CT images.
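Once an orbit is segmented, its volume follows directly from the voxel count and the CT voxel spacing. A minimal sketch, with a hypothetical left/right asymmetry measure expressed relative to the mean of the two volumes:

```python
import numpy as np

def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary segmentation in millilitres (1 ml = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(spacing_mm))  # volume of one voxel
    return np.count_nonzero(mask) * voxel_mm3 / 1000.0

def asymmetry_percent(left_ml, right_ml):
    """Relative left/right difference, in percent of the mean volume."""
    return 100.0 * abs(left_ml - right_ml) / ((left_ml + right_ml) / 2.0)
```

Since the result depends only on which voxels are included, its accuracy is limited by the segmentation, which is why the segmentation step is the focus of the project.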

In 2011, this project was presented by Nyström as an invited speaker at the International Visual Information Conference (IVIC) in Malaysia. We have also started investigating other applications for the system, e.g., volumetric measurements of the airway space in cone beam CT images.

Khalid Niazi, Ingela Nyström

Partners: M. Talal Ibrahim, Ling Guan, Ryerson Multimedia Lab, Ryerson University, Canada

Funding: COMSATS IIT, Islamabad

Period: 1003-

Abstract: Non-uniform illumination is considered one of the major challenges in medical imaging. It is often caused by imperfections of the data acquisition device and by the properties of the object under study. We have developed an iterative method that uses the gray-weighted distance transform (GWDT) to suppress the magnitude of the frequencies responsible for the non-uniformity in an image. Moreover, the method does not depend on the user, as all parameters are generated automatically from the GWDT. It has been tested on images acquired with several imaging modalities, which distinguishes it from most existing methods. See Figure 6.
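The gray-weighted distance transform itself can be computed as a shortest-path problem on the pixel grid, where the cost of a step depends on the gray values it passes through. The sketch below uses Dijkstra's algorithm with 4-connectivity and a step cost equal to the mean of the two pixel values, which is one common discretization and an assumption here; the iterative frequency-suppression part of the method is not shown.

```python
import heapq
import numpy as np

def gray_weighted_distance(image, sources):
    """Gray-weighted distance from a set of source pixels (Dijkstra, 4-connectivity).

    Each step between neighbours p and q costs (I(p) + I(q)) / 2, so paths
    through bright regions are "longer" than paths through dark ones.
    """
    img = np.asarray(image, dtype=float)
    dist = np.full(img.shape, np.inf)
    heap = []
    for r, c in sources:
        dist[r, c] = 0.0
        heapq.heappush(heap, (0.0, r, c))
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r, c]:
            continue  # outdated queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < img.shape[0] and 0 <= nc < img.shape[1]:
                nd = d + 0.5 * (img[r, c] + img[nr, nc])
                if nd < dist[nr, nc]:
                    dist[nr, nc] = nd
                    heapq.heappush(heap, (nd, nr, nc))
    return dist
```

On a uniform image the result reduces to the ordinary city-block distance; any deviation from that reflects the gray-level structure, including slowly varying illumination.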

This project was presented as part of the PhD thesis by Khalid Niazi that was defended in November 2011.


Ewert Bengtsson, Ingela Nyström

Partners: Stuart Crozier, Andrew Mehnert, School of Information Technology and Electrical Engineering, University of Queensland, Brisbane, Australia and MedTech West, Chalmers and Sahlgrenska University Hospital

Funding: TN-faculty, UU; The Australian Research Council

Period: 0503-

Abstract: The pattern of change of signal intensity over time in dynamic contrast-enhanced magnetic resonance images (DCE-MRI) of the breast is an important criterion for differentiating malignant from benign lesions. Malignant lesions release angiogenic factors that induce the growth and sprouting of existing blood vessels and the formation of new, leaky vessels. This gives rise to increased inflow and accelerated extravascularisation of contrast agent at the tumour site, which is reflected as an increase in T1-weighted signal. However, strong enhancement is not specific to malignant lesions; conversely, shallow or no enhancement is a feature of some malignant lesions. As a result, the specificity of the technique is poor to moderate. This project seeks to improve the specificity of breast MRI, and therefore its clinical utility, mainly by means of computer visualization, image analysis, and statistical pattern recognition. This collaborative project started when Bengtsson was on sabbatical at the University of Queensland in 2004-2005 and has since produced a number of results, e.g., on parametric modelling of contrast enhancement, 3D colour-coding of 4D DCE-MRI data, hardware-accelerated volume visualization, and haptic interaction with and interrogation of the volumetric data. Due to Mehnert's move to Sweden, activity was low during 2011, but we plan to continue the collaboration.
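A widely used way to summarise the pattern of signal change over time is to classify the late part of the time-intensity curve as persistent, plateau, or washout. The sketch below assumes a ±10% threshold relative to an early post-contrast sample, a common clinical convention; it is not the parametric modelling developed in this project, and the function names are hypothetical.

```python
def relative_enhancement(signal, baseline_index=0):
    """Signal change relative to the pre-contrast baseline, (S(t) - S0) / S0."""
    s0 = float(signal[baseline_index])
    return [(float(s) - s0) / s0 for s in signal]

def curve_type(signal, early_index, late_index, tolerance=0.10):
    """Classify late-phase kinetics by comparing a late sample with an early post-contrast one."""
    early = float(signal[early_index])
    late = float(signal[late_index])
    change = (late - early) / early
    if change > tolerance:
        return "persistent"  # still enhancing in the late phase
    if change < -tolerance:
        return "washout"     # signal falls after the early peak
    return "plateau"
```

Such a three-class rule illustrates the specificity problem described above: it collapses each curve to one label, whereas a parametric model keeps the full shape of the curve for pattern recognition.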

Elisabeth Linnér, Robin Strand

Funding: TN-faculty, UU

Period: 1005-

Abstract: Three-dimensional images are widely used in, for example, health care. With optimal sampling lattices, the amount of data can be reduced by about 30% without affecting image quality. In this project, methods for image acquisition, analysis, and visualization using optimal sampling lattices are studied and developed, with special focus on magnetic resonance imaging. The intention is that this project will lead to faster and better image processing with lower demands on data storage capacity.
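The figure of roughly 30% follows from a frequency-domain argument for isotropically band-limited signals with spectral radius $r$. The spatial sampling density is proportional to the volume of the reciprocal-lattice cell, and sampling is optimal when the spectrum replicas are packed as densely as possible without overlapping. Cubic (CC) sampling places the replicas on a cubic lattice with spacing $2r$, whereas body-centered cubic (BCC) sampling places them on a face-centered cubic (FCC) lattice, the densest sphere packing:

$$
\frac{N_{\mathrm{BCC}}}{N_{\mathrm{CC}}}
= \frac{V_{\mathrm{FCC}}(r)}{V_{\mathrm{CC}}(r)}
= \frac{4\sqrt{2}\,r^{3}}{(2r)^{3}}
= \frac{\sqrt{2}}{2} \approx 0.707
$$

Here $V(r)$ is the frequency-domain volume per replica. An FCC packing of spheres of radius $r$ has nearest-neighbour distance $2r$ and a cubic unit cell of side $2\sqrt{2}\,r$ containing four spheres, giving $16\sqrt{2}\,r^{3}/4 = 4\sqrt{2}\,r^{3}$ per sphere. BCC sampling therefore needs about $1 - \sqrt{2}/2 \approx 29.3\%$ fewer samples than cubic sampling, consistent with the figure quoted above.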

A paper on aliasing errors on the fcc, bcc, and cubic grids was presented at the 8th International Conference on Large-Scale Scientific Computations (LSSC) in Sozopol, Bulgaria.

Johan Nysjö, Filip Malmberg, Ingela Nyström, Ida-Maria Sintorn

Partners: Albert Christersson, Dept. of Surgical Sciences, UU Hospital

Funding: TN-faculty, UU

Period: 1111-

Abstract: To decide on the correct treatment of a fracture, for example, whether it needs to be operated on, it is important to assess the details of the fracture. One of the most important factors is the fracture displacement, particularly the angulation of the fracture. When a fracture is located close to a joint, for example in the wrist, which is the most common fracture location in humans, the angulation of the joint line relative to the long axis of the bone needs to be measured. Since the surface of the joint line in the wrist is highly irregular, and since it is difficult to take X-rays of the wrist in exactly the same projection from one occasion to the next, conventional X-ray is not an optimal method for this purpose. In most clinical cases, conventional X-ray is sufficient for making a correct treatment decision, but when comparing two different treatment methods, for example, two different operation techniques, the fracture angulation before and after treatment must be measured with higher accuracy.

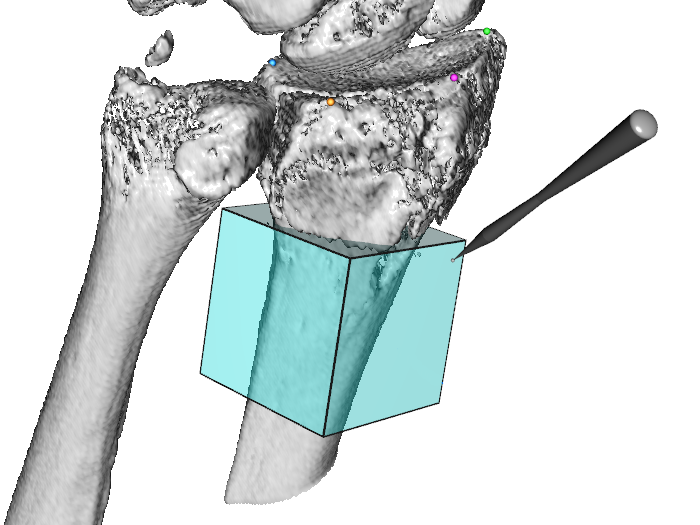

In this project, we are developing a system for measuring these angles in 3D computed tomography (CT) images of the wrist. Our proposed system is semi-automatic; the user is required to guide the system by indicating the approximate position and orientation of various parts of the radius bone. This information is subsequently used as input to an automatic algorithm that makes precise angle measurements. To facilitate user interaction in 3D, we use a system that supports 3D input, stereo graphics, and haptic feedback. Figure 7 shows a prototype of the system.
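The final angle measurement can be illustrated with principal component analysis: the long axis of the radius is the direction of largest variance of points sampled along the shaft, and the joint-plane normal is the direction of smallest variance of points sampled on the articular surface. This is a hypothetical simplification; the project's automatic algorithm is not described here, and real joint surfaces are far less planar than this sketch assumes.

```python
import numpy as np

def principal_direction(points, largest=True):
    """Unit direction of largest (or smallest) variance of a 3D point set, via SVD."""
    pts = np.asarray(points, dtype=float)
    _, _, vt = np.linalg.svd(pts - pts.mean(axis=0), full_matrices=False)
    return vt[0] if largest else vt[-1]

def joint_tilt_deg(shaft_points, joint_points):
    """Angle (degrees) between the bone's long axis and the joint-plane normal.

    0 degrees means the joint plane is exactly perpendicular to the long axis.
    """
    axis = principal_direction(shaft_points, largest=True)
    normal = principal_direction(joint_points, largest=False)
    cos_angle = abs(float(np.dot(axis, normal)))  # unit vectors; their sign is arbitrary
    return float(np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0))))
```

In a semi-automatic system, the user's rough indication of position and orientation would decide which voxels are passed in as `shaft_points` and `joint_points`.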


Ewert Bengtsson, Anders Hast

Partner: Tony Barrera, Uppsala

Funding: TN-faculty, UU

Period: 9911-

Abstract: Computer graphics is increasingly used to create realistic images of 3D objects for applications in entertainment (animated films, games), commerce (showing 3D images of products on the web), industrial design, and medicine. For the images to look realistic, high-quality rendering of shading, surface texture, and topology is necessary. A proper understanding of the mathematics behind the algorithms can make a big difference in rendering quality as well as speed. Over the years in this project, we have re-examined several established algorithms and found new mathematical ways of simplifying the expressions and increasing implementation speed without sacrificing image quality. We have also invented a number of completely new algorithms. The project is carried out in close collaboration with Tony Barrera, a self-taught mathematician. It has been running since 1999 and has resulted in more than 25 international publications and a PhD thesis. During 2011 we did not produce any new publications, mainly due to Anders Hast's sabbatical in Italy, but a number of new mathematical results were obtained that are expected to lead to publications in 2012.
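As an example of the kind of shading expression such work starts from, here is the standard Blinn-Phong model: a diffuse term plus a specular term computed with the halfway vector. It is shown only as a reference point; the simplified formulations derived in this project are not reproduced here, and the default coefficients are arbitrary.

```python
import numpy as np

def normalize(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def blinn_phong(normal, to_light, to_viewer, kd=0.7, ks=0.3, shininess=32.0):
    """Blinn-Phong intensity for one light: kd * (n.l) + ks * (n.h)^shininess."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    n_dot_l = float(np.dot(n, l))
    if n_dot_l <= 0.0:
        return 0.0  # surface faces away from the light
    diffuse = kd * n_dot_l
    halfway = l + v
    length = np.linalg.norm(halfway)
    if length == 0.0:
        return diffuse  # viewer exactly opposite the light: no specular highlight
    n_dot_h = max(float(np.dot(n, halfway / length)), 0.0)
    return diffuse + ks * n_dot_h ** shininess
```

The per-pixel normalizations and the power function are the expensive parts, which is where mathematical reformulations of shading typically aim to save time.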

Stefan Seipel, Fei Liu

Funding: University of Gävle and TN Faculty, UU

Period: 110801-

Abstract: Mobile devices have recently seen an enormous increase in computational power, with many promising technologies available at the same time, such as mobile graphics processing, spatial positioning, and access to geo-spatial databases. This research project in "ubiquitous visualization" will deal with mobile visualization of spatial data in indoor and outdoor environments. It addresses several key problems for robust mobile visualization, such as spatial tracking and calibration, image-based 2D and 3D registration, and efficient graphical representations in mobile user interfaces. Evaluation of the developed methods and techniques, mainly with respect to human factors, will be an integral part of these studies, in order to achieve the best user experience. The application scenarios studied in this project will be predominantly, but not exclusively, in the field of urban spaces and the built environment.
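Overlaying geo-spatial data on a mobile device requires converting geographic coordinates into a local metric frame around the user. A minimal sketch, assuming a spherical Earth and an equirectangular (local flat-Earth) approximation, which is adequate over the short distances typical of urban scenes; the function name is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def to_local_en(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """East/north offsets (m) of a point from an origin, equirectangular approximation."""
    lat0 = math.radians(origin_lat_deg)
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(lat0)  # meridians converge towards the poles
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

The resulting offsets can be combined with the device's tracked orientation to place geo-referenced objects in the camera view; the approximation error grows with distance from the origin, so the origin would be updated as the user moves.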